“Fake News – Computer Screen Reading Fake News” by mikemacmarketing is licensed under CC BY 2.0

Content moderation issues and challenges

As network technology has developed, more and more social media and digital platforms have appeared. More people have become Internet users and opened accounts on these platforms, and as the number of users grows, so does the number of problems. Content moderation on social media is one of them. Because of COVID-19, many meetings could not be held face to face, and Zoom became a very popular tool for hosting meetings and webinars. On 6 August 2020, a Zoom meeting hosted by the South Australian Tourism Commission was inundated with pornography within a few minutes and had to be cut short. “It’s called Zoombombing and it’s been happening fairly consistently around the world” (ABC News, 2020). In this case, “zoom made the mistake of enabling public sessions, passwords, and links shareable on social media” (Leano, 2020). Every day, thousands of people use Zoom, especially students taking online classes because of COVID-19. To prevent such incidents, real-time human moderation of public forums is necessary. Keeping passwords and meeting links private is also very important.

“106/366–TRC Family Midday Zoom Meeting” by Old Shoe Woman is licensed under CC BY-NC-SA 2.0

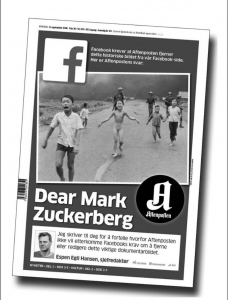

Content moderation is in a difficult position. Some people think that too much content moderation restricts freedom of speech. More often, though, some users take advantage of this so-called “freedom of speech” to promote hate speech or publish racist content that disturbs the peace of the Internet. “Moderation is hard to examine”, and where to draw the boundary between content moderation and users’ rights is a question that needs careful consideration (Gillespie, 2018, p. 6). Moderation is indeed hard. Take The Terror of War, a 1972 photograph of a nine-year-old Vietnamese girl. A Norwegian author posted the photo on Facebook, and it sparked controversy because the girl is naked in the photo, which was judged to violate Facebook’s policies on displaying nudity (Photo, 2021). Facebook deleted the post and suspended the author’s account twice. This move triggered more backlash. A Norwegian newspaper criticized Facebook, saying that it was unable to distinguish a pornographic image from a war photograph. This was a failure of content moderation. A few days later, Facebook reinstated the photo. “In many cases, there’s no clear line between an image of nudity or violence that carries global and historic significance and one that doesn’t”, Facebook Vice President Justin Osofsky explained (Gillespie, 2018, p. 3). This case shows the dilemma that content moderation now faces: it is difficult to satisfy everyone. Digital platforms cannot simply ignore the content users post, and users should also regulate themselves. A peaceful Internet environment suitable for everyone cannot be built if only one side makes the effort. The biggest challenge for platforms in content moderation is creating and establishing a regime (Gillespie, 2018, p. 13). Almost all digital platforms exist to make money, and content moderation can affect their commercial interests in many ways. How platforms choose between the two is therefore an important question, which again shows that a proper and suitable moderation regime is necessary.

Front page of the Norwegian newspaper Aftenposten, September 8, 2016, including the “Terror of War” photograph (by Nick Ut / Associated Press) (Gillespie, 2018, p. 2)

The human labor of moderation

There are many ways to moderate content, and human moderation is a very large part of the work. As digital platforms such as Facebook, Twitter and YouTube grow larger, they outsource moderation work. The people who do this work usually earn very low wages, and most of them are located in developing countries such as the Philippines and India. They are asked to look at videos or images for more than five hours a day. Worse, this work “has been frequently tied to problems such as post-traumatic stress disorder, anxiety, depression and alienation” (Radu, 2019). Moreover, some platforms promise to respond to disputed content within 24 hours, which means these workers must moderate faster; with millions of posts every day, mistakes happen very easily. Reviews can be very fast: Gillespie (2018, p. 121) notes that “fast” here can mean mere seconds per complaint, as moderators approve, reject, approve. Obviously, this does little to reassure users.

American outsourcing tech company located in the Taguig district of Manila, Philippines. Photograph by Moises Saman. © Moises Saman / Magnum Photos. Used with permission (Gillespie, 2018, p. 120)

Should government regulation play a greater role in restricting content moderation on social media?

“Platforms are not platforms without moderation” (Gillespie, 2018, p. 21). In other words, all platforms need moderation and regulation. There are several types of regulation, including government regulation, self-regulation and regulation by non-governmental organizations (NGOs). Government regulation is the most effective form, but many Internet companies do not welcome it. A major reason is that a long time passes between the government starting to draft a policy and that policy taking effect on platforms. Andy Grove, the former CEO of Intel, is quoted as saying: “This is easy to understand. High tech runs three-times faster than normal businesses. And the government runs three-times slower than normal businesses. So we have a nine-times gap” (Cunningham, 2011). Government regulation might therefore slow the development of the Internet. It is effective but not efficient. However, government regulation of content moderation is still necessary, because some serious issues may directly affect a country’s security, and the leaders of platforms or companies cannot take responsibility for such situations. “But this task cannot be avoided. No one else can or should do the job” (Samples, 2019, p. 23). In such cases, the government should step in to regulate and keep order.

In addition, government regulation alone is not enough. In Western countries, free expression is an important human right, and too much government regulation may be seen as violating freedom of speech. Regulation by third-party organizations is therefore useful: they gather citizens’ views and put demands to companies or the government. They can represent the will of citizens and are trusted by them. However, third-party regulation is not perfect either, because these organizations’ claims are still ultimately addressed to the companies themselves or to the government, so the decisions and actions put into practice still depend on those companies and the government. Self-regulation by companies and platforms is also important. Western countries emphasize autonomy and independence, so self-regulation should be the first step platforms take in content moderation. Although most digital platforms have commercial purposes, they should hold to basic ethical standards before acting as businesses. Otherwise, not only will they fail to earn more money, they may even be punished by law. To avoid losing more than they gain, companies should put more effort into self-regulation.

Role of Governments on Online Content Moderation. https://www.youtube.com/watch?v=kzuJBUl5LPI

References:

ABC News. (2020, August 7). Public forum hosted by South Australian Tourism Commission “Zoombombed” with pornography. https://www.abc.net.au/news/2020-08-07/sa-tourism-commission-zoom-bombed-with-pornography/12533898

Cunningham, L. (2011, October 1). Google’s Eric Schmidt expounds on his Senate testimony. Washington Post. https://www.washingtonpost.com/national/on-leadership/googles-eric-schmidt-expounds-on-his-senate-testimony/2011/09/30/gIQAPyVgCL_story.html

Gillespie, T. (2018). All Platforms Moderate. In Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media (pp. 1-23). New Haven: Yale University Press. https://doi-org.ezproxy.library.sydney.edu.au/10.12987/9780300235029-001

Gillespie, T. (2018). The Human Labor of Moderation. In Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media (pp. 111-140). New Haven: Yale University Press. https://doi-org.ezproxy.library.sydney.edu.au/10.12987/9780300235029-005

Leano, M. (2020, October 9). 5 Examples of Content Moderation Failures that Prove Why Content Moderation is an Essential Tool. LinkedIn. https://www.linkedin.com/pulse/5-examples-content-moderation-failures-prove-why-essential-leano

Photo, T. (2021, April 29). The Story Behind the “Napalm Girl” Photo Censored by Facebook. Time. https://time.com/4485344/napalm-girl-war-photo-facebook/

Radu, S. (2019, August 22). Social Media’s Dark Tasks Outside of Silicon Valley. U.S. News and World Report. https://www.usnews.com/news/best-countries/articles/2019-08-22/when-social-media-companies-outsource-content-moderation-far-from-silicon-valley

Role of Governments on Online Content Moderation. (2021, September 22). [Video]. YouTube. https://www.youtube.com/watch?v=kzuJBUl5LPI

Samples, J. (2019, April 9). Why the Government Should Not Regulate Content Moderation of Social Media. Cato Institute. https://www.cato.org/policy-analysis/why-government-should-not-regulate-content-moderation-social-media

The issues and challenges that digital platforms are facing on content moderation by Wenyi He is licensed under a Creative Commons Attribution 4.0 International License.