Introduction

Social media platforms enable users to create personal profiles; produce and share content ranging from images and text to videos and graphics; and form networks and social connections. Social media operators moderate content by allowing users to post and share content that complies with their codes of conduct and with copyright law and that promotes positive social values, while prohibiting content that solicits illegal activity, spreads hate or online dissent, or violates copyright law. As private companies with their own policies and governing laws, social media platforms have explicit authority to determine what is shared on their platforms. At the same time, government involvement in content moderation is attracting increasing attention as misinformation spreads on these platforms. Opinions are sharply divided on both the moderation of users by platforms and the moderation of platforms by governments. While some see content moderation as the solution to pervasive misinformation and the growth of hate and extremist views, others argue that content moderation, whether by platforms or of platforms, suppresses freedom of speech and expression. Suppressing speech, in turn, undermines democracy and reverses fundamental gains in freedom of speech and expression. To promote social virtues, protect freedom of expression, and support democracy, online content moderation by platforms should be supported, while pervasive moderation by governments should be reduced.

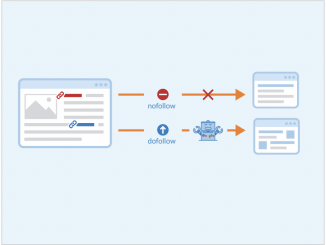

Content moderation (TechAhead Team, 2020)

Content moderation refers to the review and management of user-generated content and activity on social media platforms to determine whether a particular post or communication is permissible. Content moderation provides a safe environment for users, brands, and platforms by ensuring that all online content meets acceptable standards and follows established guidelines that respect privacy, morality, and copyright law. Today, governments are playing an increasingly important role in content moderation as they seek to manage misinformation, control speech, and curb the misuse of online speech. However, liberal theorists argue that pervasive government influence over content moderation harms democracy and freedom of expression because governments limit free speech. Therefore, while content moderation is important, governments should not play a pervasive role in online regulation and moderation.

Attempts to implement content moderation

In 2019, the Australian government imposed strict policies that hold social media platforms responsible for the content shared on their websites. The regulation holds platforms liable for serving as conduits through which their users propagate hate speech, incite violence, make defamatory comments, use obscene language, and link to external government agencies. The European Union's Directive on Copyright and Related Rights in the Digital Single Market (CDSM Directive) likewise establishes the liability of platforms such as YouTube for the content shared on their sites. Under Article 17 of the directive, digital platforms are "directly liable" for the content uploaded by their users. Platforms must therefore obtain authorization for the content posted on their websites, or make efforts to ensure that users comply with the relevant policies and hold the necessary authorizations before posting. If content is uploaded without authorization, platforms must take steps to make it unavailable on their services in order to limit their liability. However, the directive's legal standards remain controversial within the European Union: Poland, for example, has challenged the legitimacy of these provisions, which must operate in member states that already have well-developed national rules on the same issues (Geiger & Jutte, 2021).

In the United States, Congress has made several attempts to moderate online content by holding social media platforms liable for the content shared on their websites. Members of Congress have cited the heightened misinformation of the Covid-19 era and its effects on public perception and disease control in many parts of the country (Gallo, 2021). Congress is therefore debating legislation to systematically review Section 230 of the Communications Decency Act, which largely shields platforms from liability for user content, in order to regulate social media platforms and establish strict liability for the information shared on them. Bills such as the Eliminating Abusive and Rampant Neglect of Interactive Technologies (EARN IT) Act of 2020 are strongly contested because they could undermine the strong encryption adopted by companies and infringe users' privacy (Mullin, 2020). Critics also argue that such regulations would drastically reduce freedom of speech and threaten online security once implemented.

Social media platforms already have policies that regulate content on their websites concerning child sexual exploitation, hate speech, extremist views, and graphically violent content. Facebook often reviews content by public figures, such as celebrities and politicians, that potentially violates its policies. In January 2021, Facebook indefinitely suspended former US President Donald Trump's account following posts that it judged to incite violence and risk public safety, and in June 2021 it set the suspension at two years (Dwoskin, 2021). Platforms such as Twitter and WhatsApp periodically release reports on their content moderation practices. In 2019, Twitter and Instagram released guidelines and policies meant to reduce hate speech and bullying on their platforms. Facebook works with third-party fact-checkers to assess the truthfulness of information shared on the platform. Twitter displays the source of almost all the information shared on the platform so that each tweet is directly linked to its originator. These platforms also rely heavily on AI technologies to regulate content. Together, these efforts by social media operators protect the online community from harmful misinformation and promote public-interest values.

Role of Governments

Liberal theorists argue that modern values should favor democracy by creating favorable conditions for debate and civil controversy to thrive. Therefore, the role of governments in media regulation should not be extended, because doing so could erode liberal values and undermine democracy. Proposals to create regulatory oversight bodies and government agencies that suppress politically disfavored opinions should be rejected. Gillespie (2018) argues that much of the internet's spectacular growth can be attributed to social media platforms' immunity from pervasive government interference. Further, extending the government's role in media moderation can override the democratic principles of free speech and freedom of expression. Most defenders of free speech hold that it should never be regulated, because regulation can severely reduce the integrity of social knowledge and content. Increased government moderation will increase censorship, limit speech, and silence civil society and government critics. Honstein (2011) argues that this is because most governments are hostile to dissent, even constructive dissent. Forcing entire populations to conform to particular modes of communication is harmful to the growth of democracy and human rights. Therefore, increasing the role of government in media moderation is likely to hinder democracy and limit human rights.

An excellent example of the dangers of pervasive government control over media regulation is China's restrictive approach to social media platforms. The government's tight control of social media has severely limited citizens' ability to access information, realize their rights, and exercise freedom of thought and expression (Patel & Moynihan, 2021). This has drastically reduced democracy in the country, as evidenced by the growing political power of the ruling party.

Conclusion

While social media platforms must regulate, monitor, and screen user-generated content to reduce child sexual exploitation, hate speech, and speech that incites violence, and to curb the moral degradation caused by the proliferation of pornography, governments must restrict their role in platform and content moderation. Governments should only set frameworks that stipulate acceptable content standards and then step back, leaving social media platforms to perform moderation duties. It is crucial to keep governments separate from independent media platforms because of the internet's vast potential for advancing democracy and constructive criticism of government. Examples from countries such as China, where the government plays the predominant role in content moderation, show the dangers of pervasive government control. Liberal societies should therefore support content moderation by platforms but resist content moderation by governments.

Student name: Melissa Wu

This work is licensed under a Creative Commons Attribution 4.0 International License.

References

Dwoskin, E. (2021, June 4). Trump is suspended from Facebook for 2 years and can't return until 'risk to public safety is receded.' The Washington Post. https://www.washingtonpost.com/technology/2021/06/03/trump-facebook-oversight-board/

Gallo, J. (2021). Social media: Misinformation and content moderation issues for Congress (CRS Report R46662). Congressional Research Service. https://crsreports.congress.gov/product/pdf/R/R46662

Geiger, C., & Jutte, J. (2021). Towards a virtuous legal framework for content moderation by digital platforms in the EU? The Commission's guidance on Article 17 CDSM Directive in the light of the YouTube/Cyando judgement and the AG's Opinion in C-401/19. SSRN. https://poseidon01.ssrn.com/delivery.php?ID=499084127102075122076073099107016028036054068006069016124065122112003106024081067073000123007101038043029025109003099068106031114042012092028107119094029017111011022053007039080111028100067105099012026086097000091087122028091121083079118004003024082119&EXT=pdf&INDEX=TRUE

Gillespie, T. (2017). Governance by and through platforms. In J. Burgess, A. Marwick, & T. Poell (Eds.), The SAGE handbook of social media (pp. 254-278). SAGE.

Gillespie, T. (2018). Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press.

Mullin, J. (2020, December 28). In 2020, Congress threatened our speech and security with the “EARN IT” act. Electronic Frontier Foundation. https://www.eff.org/deeplinks/2020/12/2020-congress-threatened-our-speech-and-security-earn-it-act

Patel, C., & Moynihan, H. (2021). Restrictions on online freedom of expression in China: The domestic, regional and international implications of China's policies and practices. Chatham House. https://www.chathamhouse.org/sites/default/files/2021-03/2021-03-17-restrictions-online-freedom-expression-china-moynihan-patel.pdf

TechAhead Team. (2020). Content Moderation: What is it and why your business needs it. TechAhead. https://www.techaheadcorp.com/blog/content-moderation/