The past decade has seen the rapid development of the internet as a platform for free speech. Unlike with traditional media, internet users can be content creators as well as consumers. However, questions have been raised about whether the quality of uploaded content is acceptable. To address this, content moderation has become increasingly important, and each platform now has its own content governance rules. Platforms regulate negative content in order to maintain the digital environment. Nevertheless, content moderation has become controversial: the fairness of regulations across platforms has been questioned, the people who work in the field suffer serious harm, and creative freedom has been restricted. After examining these issues, I will reflect on how government intervention could improve them.

What are the Issues?

- The bias of platform regulation. Each platform has its own content moderation rules. Because online platforms set guidelines that do not undermine their own profits and benefits, such guidelines can mislead the audience about the negative consequences of uploaded content. Multiple stakeholders, such as internet bodies, industry bodies, and platforms, each hold different views on inspection. Moreover, the bias that exists in companies' standards can result from users' moral behaviors. For instance, bias in workers' decisions when applying content moderation standards can affect the overall content (Dvoskin, 2019). If a group of workers holds discriminatory views, they may ignore discriminatory content, which allows misinformation to spread and further shape public opinion.

- The ethical issues facing content moderators. The work of content moderators causes psychological harm and serious mental health problems. The job is stressful because it involves monitoring and identifying offensive content such as violence, murder, and sexually explicit material (Roberts, 2019). The well-being of moderators is neglected by the platforms (Newton, 2019). Each day, moderators review disturbing content again and again.

“I thought it will be a fun job but none of it was business, it was graphic violence, hate speech, sexual exploitation, abusing animals, murders.”

“Nobody said anything about mental health.”

“You always see death, every single day.”

“I had night terrors almost every night.”

The Verge (2019) revealed the moderators behind the screen: many endure pressure and suffer without telling anyone. The companies do not care about workers' mental health or their complaints about the work; all they care about is the removal of content. Moderators have no freedom to manage their schedules, and one reporter revealed that an inspector watches moderators through a camera (The Verge, 2019). Content moderation affects not only users who see disturbing content but also the workers who suffer mentally in this field (Jillian and Corynne, 2019).

Source: The Verge (2019)

- The restriction of creative freedom. Platform regulation of content reduces freedom of speech. Guidelines tend to favor the majority, while minority opinions are rejected by the platforms. What is restricted is determined by each platform's own standards, so creative work can be buried and never reach society. Content moderation limits the right of different regions and communities to express their opinions (Pinkus, 2021).

How can government intervention improve these issues?

Source: Cuff, M. (2017)

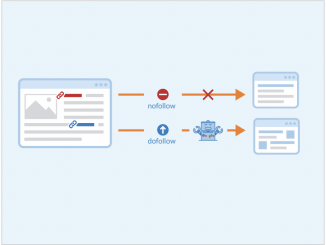

Firstly, the government can regulate internet bodies in order to reduce biased decisions by platforms. Laws implemented by the government are based on justice and apply consistently and effectively to all platforms. Compared with rules established by companies that work for their own profit, government power is more effective and fairer for the overall internet environment. Besides, the standards that the government sets are well justified for all users and platforms.

Secondly, the government can support the work of content moderators. Because the job is hidden from society and the occupation is not fully recognized, moderators cannot share what their work involves (The Verge, 2019). To address this issue, the government can provide support through legislation, so that moderators are socially recognized and respected. Besides, the content assigned to each moderator should be carefully selected, and a moderator's background should be checked before work is assigned. For example, if a person has adopted animals, their work should not involve content showing animal abuse.

Also, people who have lost family members in war should not be assigned violent content. Different people can handle different kinds of content without being affected. Another way to improve this issue is psychological testing and mental health support: moderators can take a psychological test that identifies how much pressure they can bear and whether they are fully prepared for the field. Moderators would then have more rights and respect in this field of work. Additionally, moderators could work part-time rather than concentrating on the work for the whole day. More importantly, AI can initially categorize negative content, which is then reviewed in detail by a human. However, current artificial intelligence is not yet 'intelligent' enough to replace moderators in this dangerous job.

Lastly, the government can establish laws governing the content that is created. Because platforms remove negative comments according to their own interests, the government has a role in enforcement in order to maintain consistency in online creation. For instance, after American president Donald Trump posted comments on Facebook, the company removed them from the platform and banned his account (BBC, 2021). Therefore, government regulation that takes platform standards into account is well justified for the public audience.

References:

BBC. (2021). Facebook’s Trump ban upheld by Oversight Board for now. Retrieved from: https://www.bbc.com/news/technology-56985583

Dvoskin, B. (2019). The Thorny Problem of Content Moderation and Bias. CDT. Retrieved from: https://cdt.org/insights/the-thorny-problem-of-content-moderation-and-bias/

Jillian, Y., Corynne. (2019). Content Moderation is Broken. Let Us Count the Ways. Retrieved from: https://www.eff.org/zh-hans/deeplinks/2019/04/content-moderation-broken-let-us-count-ways

Roberts, S. (2019). Behind the Screen: Content Moderation in the Shadows of Social Media. Retrieved from: https://doi-org.ezproxy.library.sydney.edu.au/10.12987/9780300245318

Newton, C. (2019). The secret lives of Facebook moderators in America. The Verge. Retrieved from: https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona

Multimedia References:

https://www.businessgreen.com/analysis/3005532/has-the-government-just-put-a-blockade-in-the-path-of-environmental-justice

New report casts light on the dark job of Facebook content moderators

This work is licensed under a Creative Commons Attribution 4.0 International License.