Content moderators: the gatekeepers of social media | Gianluca Demartini | TEDxUQ

Source: YouTube – https://youtu.be/ajjov8Ve4Ik

To many individuals, the internet stands for a number of different things. Some praise the internet for significantly enhancing freedom of speech: people can hide behind keyboards and screens to voice their genuine thoughts and opinions. To others, the internet means a higher level of sharing. It is the foundation of many online platforms, such as social media, blogs and discussion forums, and information can be shared with thousands or even millions of people within a few seconds. However, while people freely express their thoughts about literally anything and anyone, some of them risk abusing this freedom. It is not hard to imagine people being driven by their own flaws, such as selfishness and dishonesty, to release false information and news. In extreme circumstances, individuals can be drawn into vicious back-and-forth arguments.

A number of harms can result from such “freedom of speech”:

- When individuals leave objectionable and inappropriate content on a digital platform, such as the official website of a business, that content can harm the organization's reputation and brand power, especially on marketplace-type platforms. Other viewers might change their minds about purchasing, resulting in losses for the business.

- Sometimes, online platform users post unchecked information or one-sided stories, known as fake news, which can be seen as a reflection of the platform's poor quality and thus damage its reputation. For instance, during the 2016 and 2020 presidential elections, Facebook allowed its users to post unchecked and even fabricated information that misled other users, which not only tarnished Facebook's reputation but also led people to question the validity of information on social media (Kurtzleben 2018). According to the Pew Research Center, people's trust in information on social media platforms has fallen.

- People sometimes abuse freedom of speech by posting personal attacks and threats, assuming they can hide behind keyboards and screens without taking responsibility. Such content can lead to real-world danger.

To minimize the aforementioned harms and create a clean digital environment marked by authenticity and safety, a growing number of digital platforms have developed and adopted content moderation. Before exploring the potential benefits of content moderation, it is critical to understand what it is. Digital platforms that rely heavily on user-generated content, such as marketplaces and social media platforms, are often advocates of content moderation.

Content moderation refers to the process of automatically checking and scrutinizing content posted on digital platforms before it is released to other viewers, to ensure that it is legal and complies with the website's regulations (Gillespie 2020). Although more and more digital platforms are adopting content moderation, not all online users welcome it, for various reasons. Meanwhile, platforms without effective content moderation are clouded by other issues, such as cyberbullying, harassment, discrimination and racism, and even pornography and other illegal content.

However, every coin has two sides. Content moderation is also challenged on a number of points raised by the public, online platform users and advocates of freedom of speech. As a newly emerging system for monitoring, checking and controlling online content, content moderation is questioned by the public over several controversies.

Figure 1: Challenges associated with content moderation, by Eunice Fang, licensed under CC BY 2.0

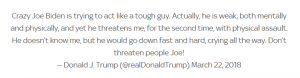

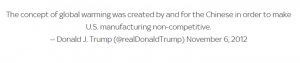

- Controversy one: Double standards for content moderation

The purpose of content moderation is to create a clean online environment for a growing number of online users. All posts are supposed to pass through the content moderation system before publication; in other words, no one should be exempt from it. However, content moderation systems are operated and controlled by the online platforms themselves, such as social media technology companies. Social media platforms often profit from their users, from exclusive news, and from the popularity of certain users, also known as the celebrity effect. Therefore, to attract more users and attention, platforms might apply double standards in content moderation based on a user's celebrity.

For instance, as shown in Figures 2 and 3 below, President Trump repeatedly made inappropriate posts on his personal Twitter account, not only spreading false information but also launching personal attacks. Figure 2 shows Donald Trump calling the former vice president, and current president of the US, “Crazy”, “Weak” and “Crying all the way”. Such words would be considered inappropriate to direct at anyone, let alone from a sitting US president to a former vice president. Figure 3 shows Trump spreading false information about global warming, essentially denying its existence despite substantial scientific evidence. Trump's inappropriate posts should have gone through the content moderation system before being made available to viewers. However, as a celebrity president, Trump had millions of followers, and people were eager to see what he would say and do next. Therefore, Twitter made an exception for him. Similar exceptions can be expected for other celebrities on social media. By adopting such double standards, online platforms discriminate on the basis of power and influence, jeopardizing equality.

Figure 2: Inappropriate posts by Donald Trump on Twitter, by Arm Coles, licensed under CC BY 2.0

Figure 3: Inappropriate posts by Donald Trump on Twitter, by Arm Coles, licensed under CC BY 2.0

- Controversy two: Content moderation causes economic losses

Depending on the nature of the online platform, content moderation can lead to substantial economic losses for technology companies, especially social media companies. Social media relies on users' posts to make profits and grow. As more and more social media platforms have been created, competition among them has intensified, and content moderation that goes too far can leave users dissatisfied. Research on content moderation has identified “significant impacts of content moderation that go far beyond the questions of freedom of expression that have thus far dominated the debate” (Myers West, 2018, p. 4366). In practice, it is difficult to control the level of content moderation, which can result in either excessive or inadequate moderation.

- Controversy three: A threat to freedom of speech

Content Moderation And Free Speech | Patriot Act with Hasan Minhaj | Netflix

Source: https://www.youtube.com/watch?v=5CQ5-NMzG8s

In 2017, the US Supreme Court described “the vast democratic forums of the Internet in general, and social media in particular,” as “the most important places . . . for the exchange of views” (Langvardt, 2017, p. 1353). This view is shared by Tom Standage, deputy editor of The Economist, who wrote:

“[Social media] allow information to be shared along social networks with friends or followers (who may then share items in turn), and they enable discussion to take place around such shared information. Users of such sites do more than just passively consume information, in other words: they can also create it, comment on it, share it, discuss it, and even modify it. The result is a shared social environment and a sense of membership in a distributed community” (cited in Samples 2021).

Digital platforms play a growing role in helping people exchange ideas and debate issues that matter to society. Regardless of location and time, people from various racial, social and geographic backgrounds can exchange ideas and opinions without the pressure of face-to-face interaction. However, content moderation might suppress many individuals' valid thoughts, resulting in some loss of freedom of speech.

The role of governmental authorities

As mentioned previously, content moderation means checking content before it is posted, in compliance with the platform's regulations and relevant laws. Each online platform might need to customize its own content moderation system. However, governments should take responsibility for developing comprehensive guidelines that set out the overall goals and principles for establishing content moderation, while leaving private digital platforms the flexibility to specify the details of their own moderation.

References

Fagan, F. (2020). Optimal social media content moderation and platform immunities. European Journal of Law and Economics, 50(3), 437-449.

Gillespie, T. (2020). Content moderation, AI, and the question of scale. Big Data & Society, 7(2), 2053951720943234.

Kurtzleben, D. (2018). Did fake news on Facebook help elect Trump? Here's what we know. NPR. https://www.npr.org/2018/04/11/601323233/6-facts-we-know-about-fake-news-in-the-2016-election (accessed 15 October 2021).

Langvardt, K. (2017). Regulating online content moderation. Georgetown Law Journal, 106, 1353.

Myers West, S. (2018). Censored, suspended, shadowbanned: User interpretations of content moderation on social media platforms. New Media & Society, 20(11), 4366-4383.

Samples, J. (2021). Why the government should not regulate content moderation of social media. Cato Institute. https://www.cato.org/policy-analysis/why-government-should-not-regulate-content-moderation-social-media#notes (accessed 15 October 2021).
