Driven by the participatory culture of the Web 2.0 era, user-generated content has come to dominate the Internet ecosystem. However, this unfiltered mix of online discourse puts people increasingly at risk of exposure to violent, pornographic, disturbing, and hateful content. Platforms’ obligation to moderate content has therefore become an issue worthy of discussion. This article discusses platforms’ attempts to implement moderation and the controversies surrounding them, and concludes by recommending that governments engage more actively in content moderation.

Platform Content Moderation Attempts: Combinations of Volunteering & Commercial, Labour & AI

Flag

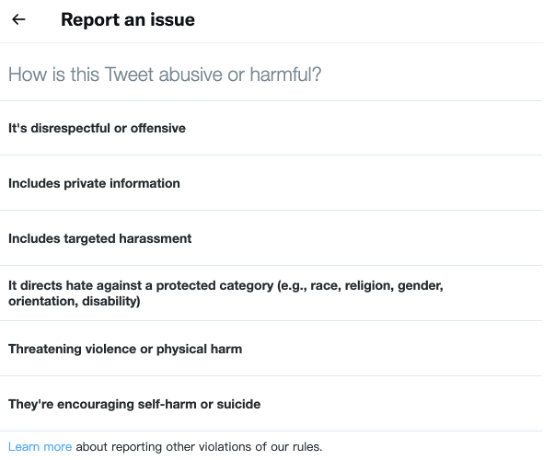

As online communities keep expanding, platform operators need ever more moderation capacity. With the massive user base as their best resource, almost all platforms have therefore built a “flagging” governance mechanism that invites users to report offensive content (Gillespie, 2017). Users flag the content that concerns them under a pre-defined rubric. Obviously inappropriate content is detected directly by the system’s bank of known harmful material and deleted, while human screeners take over ambiguous content for further review.
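The routing described above can be sketched in a few lines of Python. This is only an illustrative sketch, not any platform’s actual system: the rubric categories, the contents of the harmful-content bank, and the names `Flag`, `Decision`, and `triage` are all hypothetical, and the rule that flags outside the rubric receive no action is an assumption made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    REMOVE = "remove"              # matched the bank of known harmful material
    HUMAN_REVIEW = "human_review"  # ambiguous: handed to a human screener
    NO_ACTION = "no_action"        # assumption: reason is outside the rubric


# Hypothetical rubric and harmful-content bank, for illustration only.
RUBRIC = {"violence", "pornography", "hate_speech", "spam"}
HARMFUL_BANK = {"known-bad-hash-1", "known-bad-hash-2"}


@dataclass
class Flag:
    content_id: str
    content_fingerprint: str  # e.g. a hash of the reported item
    reason: str               # category chosen by the reporting user


def triage(flag: Flag) -> Decision:
    """Route one user flag to removal, human review, or no action."""
    if flag.reason not in RUBRIC:
        return Decision.NO_ACTION
    if flag.content_fingerprint in HARMFUL_BANK:
        return Decision.REMOVE
    return Decision.HUMAN_REVIEW
```

Note that nothing in this flow informs the creator of the flagged content or gives them a chance to respond.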

Controversies over the Flag

Flagging seems to be the best answer for platform operators who must deal with immense and constantly changing content in an information-flooded cyberspace. However, it has not escaped controversy and criticism from academics. Gillespie (2017, p.4) points out that “while flagging and reporting mechanisms do represent a willingness to listen to users, they also obscure the negotiations around them.” Specifically, apart from a few platforms such as Wikipedia and Reddit, which make the debate over flagged content public, most platforms handle flagged content simply through “delete,” “review – delete,” or “not for processing” without any public announcement, and thus offer users no opportunity to defend their comments (Gillespie, 2017). Even worse, platforms’ opaque operation fuels the abuse of flags. Fandom practice on Weibo is a powerful example. Weibo is a Chinese microblogging platform similar to Twitter. Because it is the leading platform in China for aggregating fandom discourse and celebrity news, public discourse on Weibo is closely scrutinized by fandoms. When a fandom notices derogatory comments about its favorite star, its members band together to flag the comments, whether or not they violate any community guidelines. The moderator, faced with hundreds or thousands of complaints about the same content, is then likely to record it as a violation and remove it, either through a processing error or merely because of the cumulative effect (a process that is itself opaque). In short, the flag mechanism is controversial. For one thing, users often see content quietly disappear without the creator being given any chance to argue (Gillespie, 2017); for another, the flag is abused as a way for users to express dissatisfaction, which can leave content creators on the platform feeling insecure.

Commercial Content Moderation

Another approach companies take is commercial content moderation (CCM). As Roberts (2016) defines it, CCM is a set of for-profit practices carried out by CCM labourers or firms, with content moderation and screening at its core, to ensure that users adhere to community rules. CCM labourers usually review flagged content further, so they are the last line of defense against harmful content and help the platform detect deeply hidden material. However, scholars have raised several concerns.

CCM Labourers’ Rights

CCM workers are rarely entitled to labour and employment protection. Specifically, platforms frequently outsource work to CCM workers by publishing tasks on micro-labour websites or firms such as Amazon Mechanical Turk, Upwork, or oDesk, where the labourers are generally low-status, low-paid, non-regular workers (Roberts, 2019). Even worse, exposed to harmful content thousands of times per day, many content moderators report suffering great psychological trauma yet can hardly get assistance from their companies (Messenger & Simmons, 2021). In recent years, CCM workers have made several appeals to platforms on these issues.

Policing The Web’s Darkest Corners: The Internet’s Dirtiest Secret – Source: YouTube

Vague Moderation Rules

Because CCM labourers work within the platform’s guidelines, they are essentially asked to safeguard the platform’s interests. Thus, even content that amounts to hate speech may survive if it benefits the platform (Roberts, 2016). A good case is the meme video series “Sh*t Black Girls Say.” In the original video, comedian Billy Sorrell dressed up as a woman and listed some “fashionable” sayings of women of colour in a ludicrous way. This blackface video might be categorized as racist, but YouTube did not take any action, probably because of the 7 million views it brought to the platform. Or perhaps, as Roberts (2016) argues, a platform that is too restrictive of users’ expression risks losing advertising opportunities.

The vagueness of the rules also shows in another divergence between platforms and users: content that users regard as legitimate is sometimes removed by the platform. This is a fatal issue for platforms because it directly challenges social media’s original purpose of being an impartial intermediary for speech. In such cases, arbitrary removal or restriction triggers users’ distrust of the platform and spawns concern about biased censorship (Stewart, 2021).

AI Tools

“Over the long term, building AI tools is going to be the scalable way to identify and root out most of this harmful content.” (Zuckerberg, 2018)

Because manual moderation suffers from small scale, slow progress, and inconsistent screening standards, more and more platforms regard AI as the salvation of content moderation. Indeed, tech giants’ AI efforts have proved effective: by 2019, 98% of the videos YouTube deleted for violence were detected by AI, and 99% of the ISIS and Al Qaeda-related terrorist content that Facebook deleted was identified by AI before any community flag (GIFCT, 2019). While platforms have shown skill in embedding algorithms in content moderation, AI’s drawbacks remain an inescapable source of controversy.

Algorithmic Discrimination

In media presentation, algorithmic discrimination has appeared as the labeling of black people’s faces as “primates,” or as search results about black people being accompanied by consistently negative comments. It is equally worth pondering the unfairness or discrimination that algorithms may cause in the content moderation process. One possible scenario is that algorithms for categorizing harmful content disproportionately flag a particular group’s expression and are therefore more likely to delete that group’s speech (Gorwa et al., 2020). As such, AI moderation might further entrench structural inequalities on the Internet by amplifying the subtle biases of algorithm designers.

Decontextualised Algorithms

Algorithms are sometimes unreliable because they cannot identify deeper context (Patel & Hecht-Felella, 2021). For example, Facebook once mistakenly deleted an article about breast cancer because its algorithm recognized images of breasts (Patel & Hecht-Felella, 2021). In a similar mistake, after Facebook added new sensitive words during the COVID-19 pandemic, the algorithm detected a scientist using some of those words in a 2015 scientific discussion, flagged the 2015 article as false information, and removed it (Folta, 2021). Gorwa et al. (2020) further subdivide decontextualization and define a related concern as “de-politicisation.” They illustrate that when platforms claim to have actively eliminated most terrorist propaganda, they ignore the deeply political question of who is regarded as a terrorist. In short, algorithmic moderation can occasionally be rendered ineffective or wrong because it is isolated from context.
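A toy example may help show why context-blind matching misfires. The sketch below is a deliberately naive keyword filter written for this article; it is not Facebook’s algorithm, and the term list and the function name `context_blind_flag` are hypothetical. It flags any text that contains a listed term, regardless of who is speaking, when, or why.

```python
import re

# Hypothetical sensitive-term list; real platform lists are not public.
SENSITIVE_TERMS = {"breast", "vaccine"}


def context_blind_flag(text: str) -> bool:
    """Flag text if any sensitive term appears, ignoring all context."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & SENSITIVE_TERMS)


# A legitimate health article is flagged purely because of one word.
health_article = "Early screening improves breast cancer survival rates."
print(context_blind_flag(health_article))  # prints True
```

Because the filter looks only at surface features, a medical article and genuinely harmful content are indistinguishable to it whenever they share a term.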

Moving Forward: Government Should Take a Greater Role

Platforms make various efforts, including flagging, commercial content moderation, and AI, but all of these actions are internal to firms, and all inevitably give rise to problems. Some scholars argue that most platforms would not proactively self-regulate without external pressure (Cusumano et al., 2021). Indeed, scandals such as Gamergate, The Fappening, and the violence against the Rohingya in Myanmar cast doubt on the effectiveness of self-moderation. The main reason is that platforms lack an incentive to moderate content when doing so would compromise their interests (Cusumano et al., 2021). Even worse, when their interests conflict with content management, most commercial platforms may regard compliance as too costly and intentionally disregard their regulatory obligations (Cusumano et al., 2021). The threat of legislation is the most effective incentive to push platforms to pay attention to content (Cusumano et al., 2021). Thus, this article recommends that governments take a greater role in moderation.

This work is licensed under a Creative Commons Attribution 4.0 International License.

References:

Cusumano, M. A., Gawer, A., & Yoffie, D. (2021). Can self-regulation save digital platforms? SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3900137

Folta, K. (2021, June 20). Facebook: Science is Not Welcome Here? – Kevin Folta. Medium. https://kevinfolta.medium.com/facebook-science-is-not-welcome-here-c01c3e9a2619

Gillespie, T. (2017). Governance by and through platforms. In J. Burgess, A. Marwick, & T. Poell (Eds.), The SAGE Handbook of Social Media (pp. 254-278). London: SAGE.

Global Internet Forum to Counter Terrorism. (2019). About. https://perma.cc/44V5-554U

Gorwa, R., Binns, R., & Katzenbach, C. (2020). Algorithmic content moderation: Technical and political challenges in the automation of platform governance. Big Data & Society, 7(1), 205395171989794. https://doi.org/10.1177/2053951719897945

Messenger, H., & Simmons, K. (2021, May 11). Facebook content moderators say they receive little support, despite company promises. NBC News. https://www.nbcnews.com/business/business-news/facebook-content-moderators-say-they-receive-little-support-despite-company-n1266891

Patel, F., & Hecht-Felella, L. (2021, September 28). Facebook’s Content Moderation Rules Are a Mess. Brennan Center for Justice. https://www.brennancenter.org/our-work/analysis-opinion/facebooks-content-moderation-rules-are-mess

Roberts, S. T. (2016). Commercial content moderation: Digital laborers’ dirty work. Media Studies Publications, 12.

Roberts, S. T. (2019). Behind the Screen: Content Moderation in the Shadows of Social Media (pp. 33-72). New Haven, CT: Yale University Press.

Stewart, E. (2021). Detecting Fake News: Two Problems for Content Moderation. Philosophy & Technology. https://doi.org/10.1007/s13347-021-00442-x