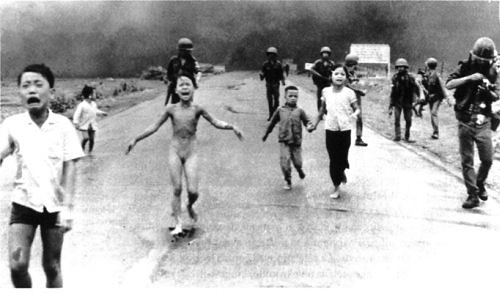

“The Terror of War”, taken by Nick Ut in 1972, is also commonly known as “Napalm Girl”. It is a picture that changed the history of warfare. In the photo, children run from a napalm attack with anguished looks on their faces. The most striking figure is a naked girl, injured by the napalm, who is running and crying. Her body is badly burnt and her skin is peeling away. The photo conveys the horror of the Vietnam War. Tom Egeland included this photo in one of his Facebook posts in 2016. It was precisely this combination of vivid pain and underage nudity that prompted Facebook administrators to remove Egeland’s post, and the removal sparked controversy. Facebook’s moderators had classified the photo as nudity and violence. Many people argued that Facebook should not have made this decision, since Nick Ut’s photo has great historical and emotional significance.

A Facebook vice president responded to the controversy by saying:

In many situations, there is no obvious distinction between nude or violent pictures that have global and historical relevance and those that do not. Some images may be objectionable in one part of the globe but acceptable in another. Even with a clear standard, checking millions of postings one by one every week is tough. (Gillespie, 2018)

Content Moderation

The term “content moderation” refers to the process of checking and handling the content uploaded to a website in accordance with relevant national legislation and the company’s own rules. As the internet has developed and more people post on social media, moderation has become increasingly important. Content moderation is a way to protect our online environment.

Moderation is hard

In the case of “The Terror of War” and Facebook, many people believe that Facebook made the wrong decision, because Ut’s photograph is both historically and emotionally significant. Setting aside whether the fault lies with Facebook’s management or with the content of the photo, content moderation is hard to manage: as the Facebook vice president stated, what counts as acceptable content differs from place to place. The greatest challenge is choosing between two sides, because moderation requires drawing untenable distinctions (Gillespie, 2018). Those responsible for content policy are often driven by the most offensive behaviour and are expected to take responsibility for it. Poor moderation can lead to the misuse of information.

Companies like Facebook and YouTube invest heavily in content moderation, employing thousands of people and using sophisticated automated systems to detect and remove inappropriate content. However, one thing is certain: the resources devoted to content moderation are unevenly distributed. Users frequently remark that regulating women’s bodies draws more attention than potentially harmful speech does.

The moderation system is also intrinsically incoherent. Because it is based mainly on community policing (that is, individuals reporting other people for actual or perceived infractions of community standards), some users will be affected more severely than others. A user with a public profile and a large number of followers is, in theory, more likely to be reported than a user with fewer followers. When one company removes a public figure, it can set off a chain reaction in which other companies follow suit.

Importance of content moderation and why we should moderate

1. Maintain national and social security and stability by strictly preventing cyber information crimes.

With the fast growth of the Internet, many criminals have used online platforms to commit a range of crimes, such as selling banned products, supplying unlawful services, organising group incidents, and running various Internet scams. Strictly monitoring Internet information security, handling prohibited content promptly, and passing leads to national security authorities have become essential parts of reviewing website content.

2. Ensure the accuracy of website content and support the website’s development.

Moderating all of a website’s content ensures that the site offers high-quality material, that its number of users keeps growing, and that user satisfaction improves, which in turn increases the overall value of the website.

Government role in content moderation

Initially, the Internet was envisioned as a secure public forum for exchanging ideas on news, religion, and politics. However, residents of certain nations do not have unrestricted access to the media. Governments could play an effective role in content moderation if they manage it properly. They could monitor companies but should not control them. China is an example of too much control. There, the government fully controls the internet and decides what people can and cannot see. Many young people in China do not even know what Facebook and Instagram are.

If the government becomes involved in content moderation, it will also face many challenges. The example of “Napalm Girl” illustrates the difficulty moderators face in determining whether a post is appropriate or not. When the government begins to regulate harmful content, it will have to commit substantial resources to examining problematic content and making multiple judgement calls, some of which will undoubtedly arouse public indignation.

The internet was made for people to share information. Government control might change what the internet means. Social media platforms are built to encourage engagement and, as a result, income. Unfortunately, the most engaging content is also polarizing and controversial. This suggests that the platforms’ algorithms are themselves a frequent source of problems.

As mentioned above, the moderation system is intrinsically incoherent. Government involvement in content moderation may quieten some voices while amplifying others, such as that of former United States president Donald Trump. While his posts on Twitter drew constant attention, people were particularly shocked to see Trump’s multiple posts threatening North Korea with nuclear war. None of those posts was blocked at the time, although Twitter’s policies explicitly state that users must not threaten violence against any group of people. If the government participates in moderation, citizens should expect every post, regardless of the poster’s rank, to be reviewed by the same standard.

Because the internet is a new community that differs from real-world society, content moderation is both a right and an obligation for content moderators such as the big technology companies Google and Facebook. The influence of these companies has spread worldwide. For example, Google and Facebook resisted the Australian Government’s move to make them pay news publishers for content shared on their platforms. What is more, the purpose of a company is profit, and a company with too much power in one field tends towards monopoly. In the field of online content specifically, if a company allows only the content that benefits itself and hides or even distorts the facts, the result will be chaos and a loss of creativity on the internet.

Anti-monopoly and content moderation are the two core issues people raise when they criticize the excessive power of content moderators. Governments could play an important role in supervising them. Though the big technology companies may worry about the consequences of a divided internet, governments could cooperate to supervise them and to legislate or establish rules and principles for content moderators. If the supervision is appropriate, the benefits would include promoting creativity, enhancing competitiveness, and helping small companies to develop.

The importance of content moderation in providing a secure social media experience cannot be overstated. Those who control our material, on the other hand, are likely to restrict our ability to exchange ideas. Whether the government should have a greater role in content moderation remains an open question. Should we trust the government to take control in a way that is fair to both the user and the company?

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

References

Gillespie, Tarleton (2018) ‘All Platforms Moderate’, in Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media. New Haven, CT: Yale University Press, pp. 1-23.

Gillespie, Tarleton (2017) ‘Governance by and through Platforms’, in J. Burgess, A. Marwick & T. Poell (eds.), The SAGE Handbook of Social Media, London: SAGE, pp. 254-278.

Massanari, Adrienne (2017) #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3): 329–346.

Roberts, Sarah T. (2019) Behind the Screen: Content Moderation in the Shadows of Social Media. New Haven, CT: Yale University Press, pp. 33-72.

Samples, John (2019, April 9) Why the Government Should Not Regulate Content Moderation of Social Media. Cato Institute Policy Analysis, No. 865. Available at SSRN: https://ssrn.com/abstract=3502843

South China Morning Post. (2019, April 25). How China censors the internet [Video]. YouTube. https://www.youtube.com/watch?v=ajR9J9eoq34&t=6s

Vietnam War Pulitzer 1973: photojournalist Huỳnh Công Út (Nick Út) of the AP news agency. (2021).