What is “Techlash”?

“Techlash” refers to the growing public, governmental, and political backlash against big media and technology companies in the Internet age. Several concerns drive it: companies such as Facebook, Amazon, Apple, and Google have come to dominate the digital platform industry; fake news has proliferated on their platforms, alongside alleged manipulation of political elections; personal information has been disclosed and market power abused; and platforms have hosted terrorist content and hate speech. Such undesirable trends should be addressed and penalized through relevant policies (Flew, Martin & Suzor, 2019, p. 34). This article explains the causes of the techlash through two media issues of public concern: advertising that degrades the user experience, and the algorithms that filter online information. It then discusses solutions from three perspectives: companies, the government, and the public.

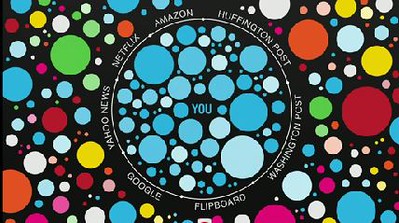

First, it is important to know that a handful of major companies dominate the new media era, with almost monopolistic tendencies: FAANG (Facebook, Apple, Amazon, Netflix, Google). Among them, Google handles almost 90% of searches and the ads displayed with them.

Google has a strong market position, and advertisers may work with Google to promote themselves, paying for clicks so that their products move to eye-catching positions at the top of the search results, where more users will see them. As a result, the ranking of search results is closely tied to advertising spend. Ads are ordered by Ad Rank, a score calculated from factors such as the bid and ad quality, and high-ranking ads can be displayed at the top of the results (Google Help, 2021). This narrows what users see and can keep them from finding genuinely useful information. Meanwhile, the spread of fake news and disturbing terrorist content on social media platforms is undermining the healthy development of social media.
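The ranking idea described above can be sketched in a few lines of Python. This is a simplified illustration, not Google’s actual formula: it assumes the score is simply the bid multiplied by a quality score, and the `Ad` class, the `ad_score` and `rank_ads` functions, and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ad:
    advertiser: str
    bid: float      # hypothetical maximum bid per click
    quality: float  # hypothetical quality score (higher is better)


def ad_score(ad: Ad) -> float:
    # Assumption: score = bid * quality. The real calculation uses more factors.
    return ad.bid * ad.quality


def rank_ads(ads: list[Ad]) -> list[Ad]:
    # Highest score first; that ad would take the most prominent position.
    return sorted(ads, key=ad_score, reverse=True)


ads = [
    Ad("A", bid=2.0, quality=4.0),
    Ad("B", bid=1.5, quality=8.0),
    Ad("C", bid=3.0, quality=2.0),
]
ranking = [ad.advertiser for ad in rank_ads(ads)]
print(ranking)
```

In this toy data, advertiser B takes the top position despite placing the lowest bid, because the assumed score rewards quality as well as money. An advertiser with a large enough budget could still outbid it, which is the concern raised above.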

Second, filter bubbles and the algorithms behind them are a public concern. When users visit a website, its algorithm automatically tracks the content they click on and recommends similar content. Although this is convenient, after prolonged use users see only information that matches their own values and preferences. Information with different content, or with values contrary to the user’s opinions, is excluded from the filter bubble, isolating users from the wider, more diverse information network and leaving little room to accept, explore, and think about new content. When the information received is this narrow, users cannot make informed judgments, and meaningful discussion of the facts becomes difficult. Such algorithms lead to a lack of understanding of opposing views and an unwillingness to consider them or other unwelcome information (Digital Media Literacy, 2021).
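A toy example can show how this narrowing happens. The sketch below is not any real platform’s algorithm: it assumes a recommender that simply ranks items by how often the user has clicked on each item’s topic, and the `recommend` function, the catalog, and the click history are all made up for illustration.

```python
from collections import Counter


def recommend(catalog, click_history, k=3):
    """Return the k items whose topic the user has clicked most often."""
    topic_clicks = Counter(topic for _, topic in click_history)
    # Rank by clicks on the item's topic; ties keep their catalog order.
    ranked = sorted(catalog, key=lambda item: topic_clicks[item[1]], reverse=True)
    return ranked[:k]


# Hypothetical catalog of (item_id, topic) pairs.
catalog = [
    ("a1", "sports"), ("a2", "politics"), ("a3", "sports"),
    ("a4", "science"), ("a5", "sports"), ("a6", "politics"),
]
# The user clicked two sports items and one politics item.
history = [("a1", "sports"), ("a3", "sports"), ("a2", "politics")]

feed = recommend(catalog, history)
topics_seen = {topic for _, topic in feed}
print(feed, topics_seen)
```

With only a slight preference for sports in the history, every slot in the feed goes to sports; politics and science never appear, so the user has no chance to click on them and shift the balance.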

To what extent can these concerns be addressed by governments, by civil society organizations, and by the technology companies themselves?

Government

The obvious advantage of government supervision of Internet companies is that it provides a sound external oversight system: rules for enterprises are implemented through law, with stronger enforcement and more effective supervision (Flew, Martin & Suzor, 2019). Governments have already imposed region-specific rules on Google advertising, so companies can know in advance which laws they must comply with in the regions where they advertise. For example, advertising cosmetic surgery is banned in Hong Kong, and advertising Canadian pharmacies is prohibited in the United States. The reasons for removing unlawful ads are announced publicly and transparently. To ensure a safe and enjoyable experience for users, Google’s advertising policies prohibit counterfeit, dangerous, and deceptive products as well as the misuse of user information, and restrict content such as adult content, alcoholic beverages, political content, and financial services, so that ads in search rankings are healthy and of high quality (Google-Privacy Policy, 2021). However, government regulation moves more slowly than platform self-regulation, national policies and regulations have limited reach over multinational companies, and many regulations cannot be agreed upon or implemented (Flew, Martin & Suzor, 2019).

Technology companies

To comply with laws and policies, avoid losing users put off by harmful content, protect their corporate image, and uphold institutional ethics, more and more platforms have decided to take on responsibility for supervision themselves. Self-regulation can identify a company’s problems more effectively and address them with targeted measures, so consumers can be protected sooner.

However, the ultimate goal of an enterprise is to make a profit from its operations, not to deliver public value. Most enterprises will not sacrifice revenue to safeguard the interests of citizens. Without an incentive mechanism, self-regulation is not applied consistently, and fairness and justice are hard to guarantee. Even when an enterprise supervises itself well, it does so to prevent the loss of customers and protect its own interests (Flew, Martin & Suzor, 2019). In addition, social media companies are protected by “safe harbor” provisions in the US but not in other jurisdictions. Global companies therefore face different content regulation requirements in their US and international operations, which results in an uneven allocation of regulatory resources.

Platforms should also pay attention to the problem of filter bubbles. Citizens should have a diverse, global worldview, and public social platforms with a civic mission can integrate unfamiliar perspectives and broaden our horizons. Platforms should review their own algorithms so that citizens see a balanced range of views (Piore, 2020). Platform owners should be alert to the problems of personalization algorithms. Used properly, though, the same algorithms can become a tool for breaking filter bubbles: for example, by adding some “neutral” perspectives, or content that does not match the user’s preferences, to the list of recommendations, so that users are exposed to a more diverse picture of the world, and by pushing public-interest information so that a broad population receives information of public value.
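One way to picture this suggestion is a recommender that reserves part of the list for content outside the user’s usual preferences. The following is a minimal sketch under stated assumptions, not a description of how any platform actually does it: the `recommend_diverse` function, its `diverse_slots` parameter, and the sample data are hypothetical.

```python
from collections import Counter


def recommend_diverse(catalog, click_history, k=3, diverse_slots=1):
    """Fill most slots by preference, but reserve some for unfamiliar topics."""
    topic_clicks = Counter(topic for _, topic in click_history)
    ranked = sorted(catalog, key=lambda item: topic_clicks[item[1]], reverse=True)
    personalised = ranked[: k - diverse_slots]
    # Candidates for the reserved slots: topics the user has never clicked.
    unfamiliar = [
        item for item in catalog
        if topic_clicks[item[1]] == 0 and item not in personalised
    ]
    # If no unfamiliar items exist, the list is simply shorter than k.
    return personalised + unfamiliar[:diverse_slots]


# Hypothetical catalog of (item_id, topic) pairs and click history.
catalog = [
    ("a1", "sports"), ("a2", "politics"), ("a3", "sports"),
    ("a4", "science"), ("a5", "sports"), ("a6", "politics"),
]
history = [("a1", "sports"), ("a3", "sports"), ("a2", "politics")]

feed = recommend_diverse(catalog, history)
print(feed)
```

Here two slots still follow the user’s sports preference, while the third goes to a science item the user has never clicked. How many slots to reserve, and how “neutral” content is chosen, are design decisions this sketch leaves open.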

Civil society

The more people take part in monitoring information on social media platforms, the stronger citizens’ collective awareness becomes and the greater the public support for supervision. Citizens can also pool diverse expertise to discuss different industries. Filter bubbles isolate Internet users from other views, make their opinions and beliefs more insular, and polarize them against one another. Citizens can avoid filter bubbles in several ways: using ad-blocking browsers; reading educational news sources that offer a wide range of perspectives; using private browsing and deleting browsing history; deleting or blocking browser cookies; and so on (Encrypt, 2019). However, civil society organizations can only advocate and make suggestions; fundamentally improving the situation requires technology companies and the government to cooperate in jointly supervising platforms.

Conclusion

The sheer number of social media users and the fragmentation of cultures across time zones make platform governance complex: platforms are subject not only to domestic law but also to the laws of other countries in which they have commercial interests. Social media companies self-regulate primarily to protect their business interests, so effective self-regulation requires incentives as well as government participation and supervision. At present, because social media is global and large multinational companies face different regulatory requirements in different places, individual governments cannot completely solve problems that cross platforms and borders; national policies conflict with one another, so problems are slow to be discovered and rules are slow to be implemented. Internet platforms themselves should be more conscientious, manage speech on their platforms more efficiently, abide by the law, and be open and transparent (Flew, Martin & Suzor, 2019). Users can control the type and direction of the information they take in, but not its content. If they want more diverse information, they need to seek it out on their own initiative.

Reference list

Digital Media Literacy: How Filter Bubbles Isolate You. (n.d.). GCFGlobal.Org. Retrieved October 13, 2021, from https://edu.gcfglobal.org/en/digital-media-literacy/how-filter-bubbles-isolate-you/1/

Encrypt, S. (2019, May 31). What Are Filter Bubbles & How To Avoid Them [Complete Guide]. Choose To Encrypt. https://choosetoencrypt.com/search-engines/filter-bubbles-searchencrypt-com-avoids/

Flew, T., Martin, F., & Suzor, N. (2019). Internet regulation as media policy: Rethinking the question of digital communication platform governance. Journal of Digital Media & Policy, 10(1), 33–50.

Google Help. (n.d.). Google. Retrieved October 13, 2021, from https://support.google.com

Google-Privacy Policy. (2021). Google Ads policies – Advertising Policies Help. Google. https://support.google.com/adspolicy

Piore, A. (2020, April 2). Technologists are trying to fix the “filter bubble” problem that tech helped create. MIT Technology Review. https://www.technologyreview.com/2018/08/22/2167/technologists-are-trying-to-fix-the-filter-bubble-problem-that-tech-helped-create/

This work is licensed under a Creative Commons Attribution 4.0 International License.