Is technology a tool or a weapon?

The role of digital and media technology platforms has been transformed as they have penetrated every sector of society. After an era of techno-optimism in which digital technology and social media were seen as aligned with the democratic good, we now find ourselves in the era of the techlash (Owen, 2019). Techlash refers to the public’s growing discontent with privacy violations, the dissemination of misinformation, and the monopolistic actions and strategies of big technology companies, notably the Big Five: Facebook, Amazon, Google, Apple and Microsoft (FAGMA). This discontent grew following the 2013 Snowden revelations, the 2016 and 2018 incidents involving social media interference in political campaigns and elections, and a suite of antitrust violations (Birch, Cochrane & Ward, 2021; Flew, Martin & Suzor, 2019). Increasingly, the platform web comprises privately owned spaces, governed by the commercial incentives of private actors and rooted in neoliberal market values, at the expense of broader public interests (Owen, 2019). The techlash phenomenon fuels demands for greater regulation of digital platform companies and raises questions about the inadequacy of existing media policy and regulation.

“Big tech’s power comes in the form of small, yet constant, behavioural nudges… these companies permeate fundamental aspects of our lives.” (Heaven, 2018, p. 28)

Contributors

The Big Five serve billions of users globally, but more importantly they act as gatekeepers to online social traffic and economic activity, and influence the very texture of society and political democracy (Dijck, 2020). FAGMA’s market valuation rose by 52% between 2019 and 2020, an increase of approximately $2 trillion in a single year (Birch et al., 2021). Google and Facebook direct 70% of all web traffic, with Google controlling nearly 90% of search advertising and Facebook 80% of mobile social traffic (Kolbert, 2017).

“These Tech Giants Seem Unstoppable” by Bloomberg Quicktake. All rights reserved. Retrieved from: https://www.youtube.com/watch?v=3cuILtoQbZE

Public concerns

The increasingly toxic nature of digital public platforms has accelerated the negative shift in public sentiment and trust toward technology companies (Flew, 2021; Flew & Gillett, 2020; Owen, 2019). The main public concerns include the amplification of misinformation and the declining reliability of information, the monetisation of personal data, and the monopolisation of the digital economy by a select few.

Social

Algorithmic power and a lack of adequate content moderation characterise the online media ecosystem and have contributed to the spread of hateful and extremist content. Recent online shocks, such as the live streaming of the Christchurch mosque massacre on Facebook Live, exposed the failure of content moderation policies to eliminate acts of violence online (Graham & MacLellan, 2018). In particular, there is little transparency about how data flows and how algorithmic mechanisms shape the visibility of certain content in order to influence behaviour (Dijck, 2020). Algorithms embedded within platforms create filter bubbles and echo chambers in which users are directed toward particular types of information and news, often false, shocking or radical in nature (Siurala, 2020).

Aftermath of the Christchurch shootings

“Memorial” by Nick in exsilio is licensed under CC BY-NC-SA 2.0

Political

The 2016 and 2018 political scandals epitomised the misuse of personal data, highlighting privacy breaches and security weaknesses within social media networks. After the 2016 United States (US) presidential election, Facebook discovered that the Kremlin-backed Internet Research Agency had placed approximately 3,000 ads on Facebook and Instagram, reaching some 10 million people, in an attempt to influence the election result (Atkinson et al., 2019). In 2018, it emerged that Cambridge Analytica had harvested the data of up to 87 million Facebook users and used it in efforts to influence the 2016 UK Brexit referendum and Donald Trump’s campaign (Flew, 2018; Flew & Gillett, 2020). These recurring data breaches raised concerns about the circulation of fake news and the abuse of personal data for the benefit of third parties (Flew, 2018).

Cambridge Analytica – Facebook scandal

“Cambridge Analytica Facebook” by Book Catalog is licensed under CC BY 2.0

Economic

FAGMA’s monopolistic behaviour imposes economic costs through market distortion and higher barriers to entry, hampering innovation and widening inequality. FAGMA engage in ‘killer’ acquisitions, buying smaller potential competitors to inhibit their innovative development projects (Siurala, 2020). For example, in 2012 Facebook acquired Instagram for $1 billion after noting that Instagram’s user engagement had surpassed that of other social media sites (Birch et al., 2021), and in 2014 it acquired WhatsApp for $22 billion (Smith, 2018). The ubiquity of platform companies in the marketplace creates vulnerabilities that magnify the control the Big Five have over advertising, distribution channels and the curation of information (Owen, 2019).

In October 2020, the US Congress released an investigative report revealing how big technology firms had exploited their control over digital ecosystems and data to advance their market power (Birch et al., 2021). Soon afterwards, the US Department of Justice announced its intention to sue Google for violating antitrust laws. The European Commission had already launched investigations into Google’s breaches of EU antitrust law. Google was fined €2.42 billion for giving its own shopping comparison service prominent placement in search results while demoting rival services, and a further €1.49 billion for contract terms that prevented third-party websites from displaying search ads from Google’s competitors (Schneider, 2020).

“While platforms are pervasive in everyday life, the governance across the scale of their activities is ad hoc, incomplete and insufficient.” (Fay, 2019, p. 27)

Redress

Techlash has intensified calls to improve the governance of digital platforms. Historically, companies like Facebook, Google and Twitter have bypassed regulation, public accountability and responsibility by claiming exceptional status, positioning themselves as neutral intermediaries that merely connect users to content (Dijck, 2020; Napoli & Caplan, 2017). Platforms have also been shielded by safe harbour provisions such as Section 230 of the US Communications Decency Act of 1996, which largely immunises them from legal liability for content posted by their users (Flew, 2018; Gillespie, 2017; Popiel & Sang, 2021).

The EU has pioneered a more activist regulatory model aimed at limiting the power of technology companies, in contrast to the light-touch regulation of countries such as the US. The EU’s General Data Protection Regulation (GDPR), which took effect in May 2018, governs the use of personal data and imposes transparency obligations on platforms, backed by an array of enforcement and punitive powers (Flew et al., 2019; Heaven, 2018). The GDPR emphasises informed consent for data collection, an individual’s right to transparent information, and data security (Schneider, 2020).

EU’s regulatory discussions

“Justice and Home Affairs Council” by Council of the EU is licensed under CC BY-NC-ND 2.0

Platforms have also taken their own initiatives in response to mounting techlash pressure. Google has begun a clean-up effort to curb controversial and fake news videos on YouTube, introducing stricter criteria for videos and monitoring popular content through its ‘violative view rate’ statistic, which estimates the share of views that go to videos breaking its rules (Heaven, 2018; Heilweil, 2021). Facebook established an Oversight Board in May 2020 to hear appeals against Facebook’s content moderation decisions and help combat terrorist and violent extremist content (Flew, 2021; Gorwa, 2019a). The Board comprises 11 to 40 members drawn from politics, civil society organisations, the media and universities, who will collectively set standards governing the distribution of harmful content (Flew & Gillett, 2020). The effectiveness of platform self-regulation remains to be seen.

“Facebook – The Oversight Board” by Nomad Creative Studio. All rights reserved. Retrieved from: https://vimeo.com/416491971

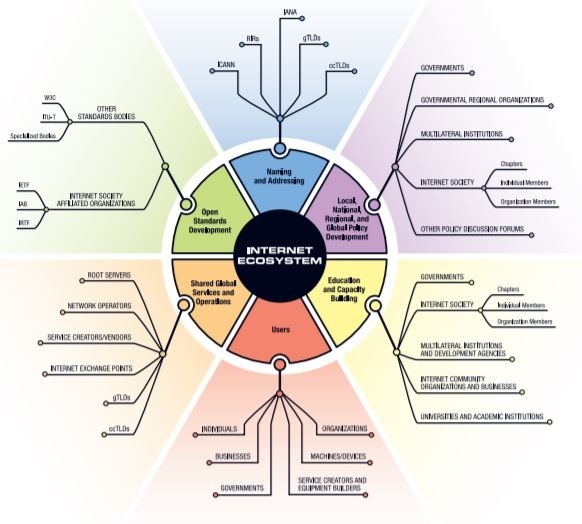

Gorwa (2019b) suggests a third-way governance framework that finds a middle ground between self-regulation and stringent external oversight such as the GDPR. This multi-stakeholder approach brings the interests of multiple groups into the policy-making process, including governments, private companies, educators and ordinary citizens (Graham & MacLellan, 2018). Co-regulation seeks to balance public and private power: regulators set general rules and laws, the industry oversees their implementation, and government and independent civil bodies provide oversight (Flew, 2018; Flew & Gillett, 2020). Hemphill (2019) envisages that leading industry organisations would jointly define principles to abide by, with an independent regulatory body overseeing and regulating compliance. This shared governance model signals an orientation toward inclusiveness, democracy and equality. Such arrangements would ensure “responsiveness to the rapid pace and development of the platform ecosystem, as well as the dynamic nature of the platform companies” (Gorwa, 2019b, p. 12).

“Digital companies should have a seat at the table in the development of the rules rather than having them force-fed” says former US Federal Communications Commission Chairman Tom Wheeler (Wheeler, 2020)

Multi-stakeholder approach

“The Internet’s governance as an ecosystem” by Internet Society. All rights reserved. Retrieved from: https://www.internetsociety.org/resources/doc/2016/internet-governance-why-the-multistakeholder-approach-works/

Future of governance

Technology companies were once viewed as positive tools of change. Over the course of the 2010s, however, this optimistic tone took a darker turn, with significant attention placed on the weaponisation of technology through the erosion of privacy rights, inadequate content moderation, data breaches and the monopolisation of the digital economy. FAGMA have been labelled ‘Digital Overlords’ wielding too much power and control (Atkinson et al., 2019). The scope and vociferousness of the techlash in the current digital and technological climate have provoked condemnation from both policymakers and the public. The future policy response is riddled with uncertainty and challenges. Nevertheless, if the internet is to remain a democratic and open space, it will require a coordinated multi-stakeholder effort that involves and values governments, technology companies, civil society organisations and citizens, all of whom are jointly responsible and accountable for governing the digital ecosystem.

Fuel behind the fire: How techlash has sparked calls for platform governance © by Angele Yan is licensed under CC BY-NC-ND 4.0

Reference List

Atkinson, R., Brake, D., Castro, D., Cunliff, C., Kennedy, J., McLaughlin, M., et al. (2019). A policymaker’s guide to the “Techlash”: What it is and why it’s a threat to growth and progress. Information Technology and Innovation Foundation, 1-56. Retrieved 10 October 2021, from https://itif.org/publications/2019/10/28/policymakers-guide-techlash

Birch, K., Cochrane, D., & Ward, C. (2021). Data as asset? The measurement, governance, and valuation of digital personal data by Big Tech. Big Data & Society, 8(1), 205395172110173. https://doi.org/10.1177/20539517211017308

Dijck, J. (2020). Governing digital societies: Private platforms, public values. Computer Law & Security Review, 36, 105377. https://doi.org/10.1016/j.clsr.2019.105377

Fay, R. (2019). Digital platforms require a global governance framework. Models for Platform Governance. Retrieved 10 October 2021, from https://www.cigionline.org/articles/digital-platforms-require-global-governance-framework/

Flew, T. (2018). Platforms on trial. Intermedia, 46(2), 24-29. Retrieved 6 September 2021, from https://eprints.qut.edu.au/120461/

Flew, T. (2021). Communication futures for internet governance. SSRN, 1-13. https://doi.org/10.2139/ssrn.3806967

Flew, T., & Gillett, R. (2020). Platform policy: Evaluating different responses to the challenges of platform power. In International Association for Media and Communication Research (IAMCR) Annual Conference (pp. 1-19). Sydney; The University of Sydney. Retrieved 10 October 2021, from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3628959

Flew, T., Martin, F., & Suzor, N. (2019). Internet regulation as media policy: Rethinking the question of digital communication platform governance. Journal of Digital Media & Policy, 10(1), 33-50. https://doi.org/10.1386/jdmp.10.1.33_1

Gillespie, T. (2017). Regulation of and by platforms. In J. Burgess, A. Marwick & T. Poell (Eds.), The SAGE Handbook of Social Media (1st ed., pp. 254-278). SAGE. Retrieved 6 September 2021, from https://doi.org/10.4135/9781473984066.n15

Gorwa, R. (2019a). What is platform governance? Information, Communication & Society, 22(6), 854-871. https://doi.org/10.1080/1369118x.2019.1573914

Gorwa, R. (2019b). The platform governance triangle: Conceptualising the informal regulation of online content. Internet Policy Review, 8(2), 1-22. https://doi.org/10.14763/2019.2.1407

Graham, B., & MacLellan, S. (2018). Governance innovation for a connected world: Protecting free expression, diversity and civic engagement in the global digital ecosystem (pp. 1-19). Stanford: Centre for International Governance Innovation. Retrieved from https://fsi-live.s3.us-west-1.amazonaws.com/s3fs-public/stanford_special_report_web.pdf#page=44

Heaven, D. (2018). Techlash. New Scientist, 237(3164), 28-31. https://doi.org/10.1016/s0262-4079(18)30259-8

Heilweil, R. (2021). YouTube says it’s better at removing videos that violate its rules, but those rules are in flux. Vox. Retrieved 10 October 2021, from https://www.vox.com/recode/2021/4/6/22368809/youtube-violative-view-rate-content-moderation-guidelines-spam-hate-speech

Hemphill, T. (2019). ‘Techlash’, responsible innovation, and the self-regulatory organization. Journal of Responsible Innovation, 6(2), 240-247. https://doi.org/10.1080/23299460.2019.1602817

Kolbert, E. (2017). Who owns the internet? The New Yorker. Retrieved 10 October 2021, from https://www.newyorker.com/magazine/2017/08/28/who-owns-the-internet

Napoli, P., & Caplan, R. (2017). Why media companies insist they’re not media companies, why they’re wrong, and why it matters. First Monday, 22(5), 1-12. https://doi.org/10.5210/fm.v22i5.7051

Owen, T. (2019). Introduction: Why platform governance? Models for Platform Governance. Retrieved 10 October 2021, from https://www.cigionline.org/articles/introduction-why-platform-governance/

Popiel, P., & Sang, Y. (2021). Platforms’ Governance: Analyzing Digital Platforms’ Policy Preferences. Global Perspectives, 2(1), 1-13. https://doi.org/10.1525/gp.2021.19094

Schneider, I. (2020). Democratic governance of digital platforms and artificial intelligence? eJournal of eDemocracy and Open Government, 12(1), 1-24. https://doi.org/10.29379/jedem.v12i1.604

Siurala, L. (2020). What are the new challenges of digitalisation for young people? (pp. 1-43). Finland: European Union–Council of Europe Youth. Retrieved from https://static1.squarespace.com/static/5f3a322a5e2b1503a4735e52/t/60cd770249c9d74d1b5a0c50/1624078085580/Techlash+LS+12-11-2020+LP.pdf

Smith, E. (2018). The techlash against Amazon, Facebook and Google—and what they can do. The Economist. Retrieved 10 October 2021, from https://www.economist.com/briefing/2018/01/20/the-techlash-against-amazon-facebook-and-google-and-what-they-can-do

Wheeler, T. (2020). COVID-19 has taught us the internet is critical and needs public interest oversight [Blog]. Retrieved 10 October 2021, from https://www.brookings.edu/blog/techtank/2020/04/29/covid-19-has-taught-us-the-internet-is-critical-and-needs-public-interest-oversight/