This work is licensed under a Creative Commons Attribution 4.0 International License.

The power of digital platforms has reached unprecedented heights.

Beneath the surface lies a system designed to manipulate human behaviour.

‘The Social Dilemma’

The documentary directed by Jeff Orlowski unpacks the perils of social networking by examining how manipulative algorithms disregard public values.

This essay explores the key issues raised in The Social Dilemma (2020), namely platform business models, algorithmic manipulation and misinformation, to investigate how users are exploited. These issues are situated in the context of the internet’s history, its power relations and its future. The essay also addresses contradictions in the film’s messaging that stem from its choice of commentators.

Platform Business Model

Tech elites derive their unfettered power from platform-based business models. This is the most pressing issue in the internet’s transformation because, as the film suggests, social values will keep eroding for as long as platforms are fuelled by data.

The voices of former platform executives are interwoven to reveal how the extraction of user information under the “datafication business model” is exploitative.

Tristan Harris outlines three goals associated with the tracking of user behaviour:

- Engagement – increase usage through endless scrolling

- Growth – keep users coming back and inviting others through addictive app features

- Advertisement – generate revenue through targeted advertising

Platforms should therefore be understood as corporate entities rather than egalitarian spaces. Van Dijck et al. (2018) explore how these goals are powered by algorithms: in the platform context, business models are tied to acquiring profit by capturing user attention. This aligns with the film’s message about monetary incentives, since the “public value of civic engagement” is neglected by infrastructures that serve the interests of their operators (Van Dijck et al., 2018, p. 22).

In the film, Shoshana Zuboff describes these practices as surveillance capitalism: the extraction of users’ activity data to make marketable predictions. The asymmetrical power of private companies is enhanced by the opaque design of self-regulated platforms. The appropriation of behavioural data without explicit permission therefore has a profound impact on individual autonomy and decision rights (Zuboff, 2019).

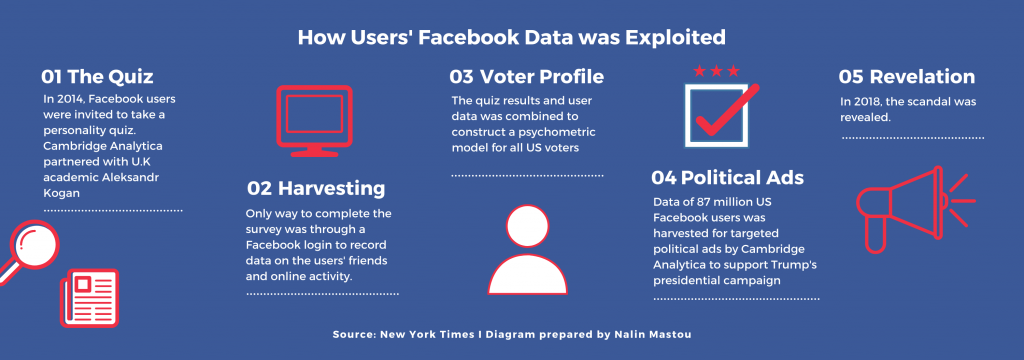

Case Study 1: Cambridge Analytica

Criddle (2020) reports on how the political consultancy harvested the Facebook data of 87 million users and used it to display targeted political advertisements during the 2016 US presidential election.

Data proved to be the most valuable strategic asset under the platform business model, as it was used to influence voters’ behaviour. Zuboff (2019) asserts that the manipulations of Cambridge Analytica normalised practices that are now standard operations for surveillance capitalists.

Selling personal information gathered through mass surveillance is a major social dilemma because it undermines user agency. It also challenges early ideas of the internet as a space for creative destruction. Berners-Lee (2019) identifies the profit-driven model as a major disruptor of today’s web. He stresses the need for companies to consider human rights in the design of their products, as commercialisation disregards the principles of freedom and openness that early internet pioneers valued.

Algorithmic Manipulation

Platforms build the psychology of persuasion into manipulative algorithms designed to modify human behaviour.

The documentary reveals this effectively, exposing how manipulation has come to define internet culture and the meaning of communication in the modern age:

“We’ve put deceit and sneakiness at the absolute center of everything we do.”

Jaron Lanier – The Social Dilemma

Orlowski unpacks the ethical and moral ramifications through narrative drama. Actors personify the algorithm as platform employees who observe a teenager’s online engagement and use behavioural modification techniques to drive monetisation. This suggests that the loss of individual autonomy and user addiction are products of privacy-intrusive surveillance.

The historical idea of a tools-based technology environment is thus contested, as digital platforms have produced a system based on addiction and manipulation.

This echoes Van Dijck et al. (2018), who challenge the idea of platforms as mere tools for activities such as texting, dating, shopping and content viewing. Over time, these practices alter user experience and shape society, as the impact of algorithms occurs at a macro level.

Reviglio & Agosti (2020) examine how algorithmic manipulation overpowers users and exploits human weaknesses. Platforms are designed to drive addiction through manipulative strategies, using constant A/B testing to determine which message triggers a reaction (Reviglio & Agosti, 2020). This links to the notion of positive intermittent reinforcement raised in the film, where emotional highs are triggered by the unpredictability of a platform.

Case Study 2: Facebook’s “I Voted”

Zuboff exposes how Facebook used nudging techniques through the “I Voted” visual cue during the 2010 US midterm elections. Facebook realised it could modify human behaviour and emotions, as it increased voter turnout by an estimated 340,000 without triggering users’ awareness (Lind, 2014).

The overt persuasion and covert manipulation carried out by algorithms exploit weaknesses in human biology. To combat this, Reviglio & Agosti (2020) introduce the notion of ‘algorithmic sovereignty’, which establishes user participation in algorithmic decision-making as a moral right.

The film should have explored this concept, as public algorithms could decentralise the monopolistic power of digital platforms and help guarantee democratic oversight (Reviglio & Agosti, 2020).

Meaningful input from public institutions and civil society offers the most logical way to counter persuasion and manipulation, secure algorithmic sovereignty and fulfil the original promise of the web.

Misinformation

The rapid circulation of fake news and conspiracy theories, enabled by the exploitative use of digital technology, threatens society. The film successfully conveys this message, informing viewers of how uncivil and manipulative behaviours pose an existential threat to humanity.

“Algorithms…are…getting so good at creating fake news that we absorb as if it were reality…It’s as though we have less control over who we are and what we really believe.”

Justin Rosenstein – The Social Dilemma

Platforms gain profit and power when participation is driven by hate, anxiety and fear. This is an especially visible issue in an age where information is plentiful but attention is scarce.

This aligns with the findings of Stark and Stegmann (2020), who suggest that increasing polarisation is the most efficient way for platforms to keep users engaged. Under algorithmic governance, content is filtered by popularity rather than by journalistic standards (Stark & Stegmann, 2020). Private internet companies are thus motivated by economic incentives to serve the interests of advertisers.

The film shows footage of chaos and culture wars to demonstrate how digital technologies propagate tribalism and devalue truth. Sasahara et al. (2020) explore this issue, showing how social media foster echo chambers where people engage only with information that confirms their biases. Confidence in minority views, such as conspiracy theories and fabricated news, is heightened because individuals are not exposed to belief-inconsistent information (Sasahara et al., 2020).

Sacha Baron Cohen’s (2019) speech at the Anti-Defamation League reveals how platforms that amplify content provoking negative emotions have fuelled division and polarisation.

Video by IndraStra Global. https://youtu.be/P4f7lBcX50k

The film revisits Pizzagate, a fake news scandal in which a child trafficking ring was falsely believed to be operating out of a pizza restaurant. The theory spread through Facebook’s recommendation engine inside echo chambers of users susceptible to conspiracy theories and alternative information sources.

This exposes how algorithmic culture threatens public discourse, as users are compelled to communicate with like-minded people without questioning the legitimacy of such narratives.

A Pew Research Center report by Rainie et al. (2017) expresses concern for the future, as fragmentation threatens the vision of the internet’s original creators. The internet was envisioned as a space for the open exchange of ideas, yet people now engage only with forums that mirror their thinking, producing an echo effect.

While the film calls for radical restructuring through greater regulatory oversight and state surveillance, this could prove more harmful, as dominant institutions and government actors may censor certain ideas and block access to alternative news sources (Rainie et al., 2017).

Contradictory Messaging

The film’s paradoxical nature is apparent in its commentary, as its most prominent speakers are white male tech entrepreneurs who claim they were unaware of the downsides of the platform model and its algorithms. This reinforces the Silicon Valley ethos of individualism, which casts the individual as the innocent force driving innovation to improve the world (Castells, 2001).

But not everyone’s experience online is equal. The exclusion of voices beyond the Valley signals a core problem that Noble (2018) raises about who produces technology. Ideologies embedded in platforms enact new modes of racial profiling, evident in the sexualisation of black women in search engine results (Noble, 2018). The absence of marginalised voices when the film discusses algorithmic bias is therefore contradictory.

Conclusion

Nevertheless, the film is highly effective in exposing the threat that data mining, manipulative algorithms and misinformation pose to civilisation. Early utopian prospects of the web are offset by commercialisation and concentrations of platform power. Thus, the future state of democracy is at risk if platforms continue to prioritise advertisers’ interests.

References

Berners-Lee, T. (2019, March 12). 30 years on, what’s next #ForTheWeb. World Wide Web Foundation. https://webfoundation.org/2019/03/web-birthday-30/

Castells, M. (2001). The Culture of the Internet. In The Internet Galaxy: Reflections on the Internet, Business and Society (pp. 36-63). Oxford University Press.

Criddle, C. (2020, October 28). Facebook sued over Cambridge Analytica data scandal. BBC News.

Lind, D. (2014, November 4). Facebook’s “I Voted” Sticker was a secret experiment on its users. Vox.

Noble, S. U. (2018). A society, searching. In Algorithms of Oppression: How Search Engines Reinforce Racism (pp. 15-63). New York University Press.

Orlowski, J. (Director), & Rhodes, L. (Producer). (2020). The Social Dilemma [Motion picture]. Exposure Labs.

Rainie, L., Anderson, J., & Albright, J. (2017, March 29). The Future of Free Speech, Trolls, Anonymity and Fake News Online. Pew Research Center.

Reviglio, U., & Agosti, C. (2020). Thinking Outside the Black-Box: The Case for “Algorithmic Sovereignty” in Social Media. Social Media + Society, 6(2), 1-12. https://doi.org/10.1177/2056305120915613

Sasahara, K., Chen, W., Peng, H., Ciampaglia, G. L., Flammini, A., & Menczer, F. (2020). Social influence and unfollowing accelerate the emergence of echo chambers. Journal of Computational Social Science, 4(1), 381-402. https://doi.org/10.1007/s42001-020-00084-7

Stark, B., & Stegmann, D. (2020). Are Algorithms a Threat to Democracy? The Rise of Intermediaries: A Challenge for Public Discourse. AlgorithmWatch. https://algorithmwatch.org/en/wp-content/uploads/2020/05/Governing-Platforms-communications-study-Stark-May-2020-AlgorithmWatch.pdf

The New York Times. (2018, April 9). How Cambridge Analytica Exploited the Facebook Data of Millions. [Video]. Youtube. https://youtu.be/mrnXv-g4yKU

Van Dijck, J., Poell, T., & de Waal, M. (2018). The Platform Society as a Contested Concept. In The Platform Society: Public Values in a Connective World (pp. 5-32). Oxford University Press.

Zuboff, S. (2019). Surveillance Capitalism and the Challenge of Collective Action. New Labor Forum, 28(1), 10-29. https://doi.org/10.1177/1095796018819461