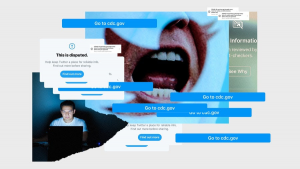

Figure 1. Content moderation illustration by Google (n.d.)

“Content moderation” has become a common term, especially as the spread of 3G and 4G networks has increased internet usage among both adults and children. It is the practice of applying a preset set of guidelines and rules to user-generated submissions to decide whether a communication is permissible. Traditionally, digital platforms have been subject to less direct regulation than content creators such as newspapers because they are intermediaries (Ganesh & Bright, 2020). However, this lighter regulation is creating problems of its own. If digital platforms are not monitored, scammers and ill-intentioned people can harm users who are not well versed in the internet. This article explores content moderation: the issues arising for platforms, the attempts to implement it and the associated controversies, and the government’s role in enforcing it.

Various issues are arising for digital platforms with content moderation. First, the platforms are in their adolescence. Social and legal norms around speech have evolved over the years, but digital platforms and online speech are still developing. Most of the challenges digital platforms face are issues that took years to work out, or remain far from resolved, in other media fields. In particular, the free speech tradition affects most digital platforms. The platforms were not ready for how this issue would play out in different contexts, such as political, social, and legal ones (Stewart, 2021). Most platforms have taken a hands-off approach that has caused problems, but intervening also causes problems.

Figure 2. Photo illustration by Sam Whitney; Getty Images

Secondly, the current arrangements for content moderation are flawed. Digital platforms are increasingly contracting thousands of moderators and using identification software. However, existing arrangements still have gaps and are unclear. While defamation, hate speech, and content that compromises children are regulated, there is a grey area of content that does not directly infringe regulations or rules but can still be harmful. For example, medical misinformation campaigns during the Covid-19 pandemic can cause harm, but they are not explicitly covered by regulation. The arrangements also raise questions about how the speech of the many gets lost in the ambiguity of a large-scale system, where it can be difficult to be sensitive to culture, context, and language (Gillespie, 2020). For instance, what resources do digital platforms allocate to moderating the speech of the many versus that of public figures like Donald Trump? Digital platforms’ failure to take action against content that violates their policies has been the key cause of controversies.

Figure 3. Fake news illustration by PixelKult, 2016

It has also seemed impossible to filter all inappropriate user-generated content (UGC) posted online. Digital platforms, including social media platforms such as Facebook, YouTube, Twitter, and Instagram, face this problem. Facebook, Twitter, and Instagram encounter similar UGC problems, such as political and religious rants, hoax information, business advertisement scams, spam leading to malware, and online bullying in comment sections (Caplan, 2018). YouTube, with over 1.86 billion users, can influence its viewers and has the potential to affect innocent ones (Statista, 2021). Common UGC problems on YouTube include uncensored violent and brutal comments, viral videos aimed at attacking personalities, and controversies over discrimination and free speech. These problems are becoming severe because they are difficult to control.

Figure 4. Apps illustration by PixelKult, 2016

There have been attempts to implement content moderation. First, there has been the development of policies. Policies are documents that mix broad statements of values with prohibitions against specific behaviors. Their purposes include informing people about the rules and outlining the actions the platform will take in case of a policy violation (Jhaver, Ghoshal & Bruckman, 2018). Platforms also often have extensive, non-public content moderation documentation.

Secondly, there are various types of moderation, such as manual, automated, and user moderation. Manual moderation depends on large teams to review the content by hand. Using the digital platform’s policies, the moderators remove content that does not comply. This system is not fully effective because some areas remain unclear, but over the last few years there have been efforts to distinguish between permissible and impermissible content (Tehrani, 2021). Currently, it remains the most efficient approach to content moderation. The moderation occurs after the content has been posted, which is known as “post-moderation.” As users of digital platforms drive revenue, user experience is increasingly prioritized, hence the need for post-moderation.

Use of automated moderation has increased due to technological advancements. It is based on machine learning and artificial intelligence techniques. In the past few months, YouTube, Twitter, and Facebook have made public statements about their moderation strategies. While they differ in details, they all have a common element: increased reliance on automated moderation. However, automated technology does not work at scale. It can work well for some languages but barely works overall (Gillespie, 2020). Most digital platforms have acknowledged the shortcomings of automated moderation and have decided to use it for specific purposes, such as detecting terrorist content on Facebook. Accuracy remains an ongoing challenge for this type of moderation.

User moderation is a form of human-based moderation. The recent blocking of Donald Trump’s social media accounts showed that moderation is not limited to automation (Tehrani, 2021). User moderation relies on the online community for regulation. This method gives users more control rather than letting an algorithm take control. In its most basic form, there is a reporting function through which content flagged by users is passed to internal teams for review. This gives digital platforms an economical way to handle moderation without being seen to censor online content. Currently, platforms such as Twitter are heading in the direction of user moderation. Another form of user moderation is where platforms rely on moderators from within their own user communities. Reddit is a standard example, where each community, or “subreddit,” is scrutinized for spam by volunteers from within that online community. This is effective because it requires minimal investment and leverages the benefits of a human moderator who is familiar with the context and can respond quickly.

Content moderation has sparked various controversies. Some argue that there is no economic gain from cutting individuals off for saying something harmful or incorrect; all the digital platform does is lose a client. The issue boils down to something close to impossible: asking a digital platform to put morality over profit (Ganesh & Bright, 2020). These challenges are compounded where different cultural norms and languages are involved. What sounds inoffensive when translated into English could be hate speech in a different culture, and automated moderation cannot make such distinctions. Other issues include the harsh penalties for someone who shared something false without realizing it (Jhaver, Ghoshal & Bruckman, 2018). Additionally, who decides whether something is hate speech or just a dissenting opinion? Indeed, moderation remains one of the most controversial issues on digital platforms.

The government should have a greater role in enforcing content moderation restrictions on social media only if it will do so fairly. Government involvement in content moderation may mute some voices while amplifying others. In 2016, the Israeli government partnered with Facebook to monitor incitement on the platform. This move meant that the Israeli government could censor its Palestinian political opponents by restricting their assembly and their journalists. Former US president Donald Trump shocked many by threatening North Korea with nuclear war in his tweets.

Figure 5. Donald Trump photo by Paradise Ng, 2018

None of the tweets were blocked, which raises the question of whether government officials get a pass. China also has strict surveillance tools that censor all networks, such as news, social media, and television, within its borders (Dawson, 2020). As such, the Chinese government reserves the power and authority to suppress any publication that threatens it. Giving the government a greater role in enforcing content moderation restrictions would risk silencing multitudes. Therefore, government involvement should be allowed only if the government acts fairly.

Content moderation is essential to ensure a safe experience on digital platforms. However, several issues are arising for digital platforms, such as current content moderation arrangements being flawed and unclear in some areas. It has also proven impossible to monitor all the user-generated content online. Nonetheless, there have been various attempts to implement content moderation, from policies to automation techniques. Controversies remain due to barriers such as culture, language and, to some extent, economic considerations. Lastly, governments should have a greater role in enforcing content moderation only if they will not leverage the opportunity to their own advantage. Clearer distinctions should be developed in the regulations and laws governing content moderation.