With the development of the Internet, the volume of content on network platforms has exploded, but a large amount of harmful and low-quality information has also been generated, and content security has become an important part of Internet ecosystem governance. One indispensable step is filtering out low-quality and vulgar content, both to promote high-quality content and to prevent the user experience from degrading. However, content review also presents digital platforms with many difficulties.

Issues digital platforms face with content moderation

The difficulty for platforms is determining when, how, and why to intervene. Deciding where to draw the line between what is acceptable and what is not is very difficult (Gillespie, 2018). Not all content is clearly right or wrong, so it is hard for all reviewers to follow the same set of guidelines and reach consistent conclusions. Content that has passed the filter can largely be guaranteed to be harmless: even if its quality is not high, it carries no risk. At this point, the enterprise must choose between reviewing content before it is published and publishing first and reviewing afterward.

Because moderation is resource-intensive and relentless, reviewing content is difficult: there is no absolute standard, and a single failure can provoke enough user outrage to overshadow many previous successes (Gillespie, 2018). Content production is growing rapidly, user-experience expectations are rising, negative events remain a constant risk, and review standards are strict and detailed. Under these pressures, the various non-standardized review management systems weigh on reviewers like a giant rock. Reviewers not only have to work many night shifts but also view large amounts of bloody, violent, and other content that harms their physical and mental health. Some develop psychological problems after just one or two months on the job, so staff turnover is extremely high.

While the amount of content has skyrocketed, its format keeps changing. In addition to traditional visual material, audio, long and short videos, and live broadcasts are all becoming more popular. This poses huge and severe challenges for review management on content platforms that aim for real-time delivery (release speed and user experience) without problems (report rates and negative events). Illegal content has become pervasive: news content, user comments, user avatars, and virtually any other place where content is published can hardly avoid being targeted by it.

Illegal content is also becoming increasingly adversarial. It is often published in an organized way, and its publishers change content formats and switch accounts to evade detection and moderation strategies. Platforms must moderate in some way, not only to protect users from one another, but also to protect groups from their antagonists, and at the same time to present their best face to advertisers, partners, and new users (Gillespie, 2018).

Regardless of the specific rules, the manner in which these platforms enforce their standards has implications. It matters whether enforcement takes the form of a warning or a removal, and whether action is taken before or after someone complains (Gillespie, 2018). Platforms typically maintain a professional set of content standards as a reference. A major problem here is that manual screening is inefficient and costly. Moreover, regardless of how it is implemented, moderation takes a significant amount of time and effort: complaints must be handled, dubious material or behaviour must be evaluated, penalties must be applied, and appeals must be considered (Gillespie, 2018).

One of the most difficult problems platforms confront is establishing and enforcing a content moderation system that can handle both extremes at the same time (Gillespie, 2018). Those in charge of content regulation must be careful not to ban culturally significant material in the process, and the reviewers implementing those rules must exercise sensitive judgment about what crosses the line (Gillespie, 2018). Even a self-governing online community faces the problem of deciding who should make the rules that apply to everyone, and as the community grows, its users' values compete with one another and the challenges increase.

“Creative Commons Keyboard Borders Image” by Nick Youngson, https://www.picpedia.org/keyboard/b/borders.html. Licensed CC BY 2.0 http://creativecommons.org/licenses/by/2.0.

Attempts to implement content moderation

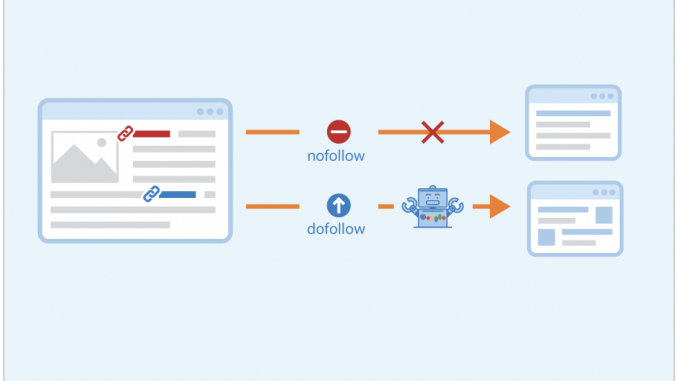

In recent years, with the continuous development of cutting-edge technologies, the value of artificial intelligence in content review has become widely recognized. AI costs much less to apply than manual labour, and it reviews content more efficiently. However, although AI review is efficient and cheap, the technology is still immature and not intelligent enough, so it falls short when flexible judgments or handling are required. Dealing with this content using AI is frequently catch-as-catch-can, because the complicated process of sorting user-uploaded material into either the acceptable or the rejected pile is far beyond the capability of software or algorithms alone (Roberts, 2019). AI cannot reliably distinguish acceptable from unacceptable content in images and videos: it lacks subjective judgment, and it cannot assess content that falls outside the cases it was designed to recognize. Machine-automated detection of images and video remains an extremely hard computational problem, so the vast majority of social media content submitted by users requires human intervention to be screened properly, especially when video or photos are involved (Roberts, 2019).

We also live in a highly varied environment. Different parts of the world have different political and cultural backgrounds, and these can change on a daily basis; AI cannot manage such complicated dynamics. With the development of AI, machines can take over part of the review work, but because content is so complex, manual review remains essential. For a huge platform, AI is still far from replacing human reviewers entirely, or even replacing most of them. Content review is far more difficult than people think.

“Digital Analytics” by Seobility, https://www.seobility.net/en/wiki/Digital_Analytics. Licensed CC BY 2.0 http://creativecommons.org/licenses/by/2.0.

The role of the government in restricting content moderation

The government should not regulate social media content moderation. Restricting and penalizing smaller platforms may force them to shut down, because most platforms lack the financial resources of Facebook, Twitter, or Google (Dawson, 2020). In addition, heavy-handed content moderation can silence marginalized groups. According to Dawson (2020), governments may force Facebook to undermine minorities, which is terrible news for those of us who want to use these platforms to speak out about social injustice or organize protests. In terms of their influence on public debate and on users' lived experience, the norms imposed by these platforms are likely to matter more than the legal constraints under which they operate (Gillespie, 2017). With these inevitable and perhaps unresolvable political controversies rapidly populating their sites, social media companies have had not only to establish and revise their policies but also to develop more sophisticated ways of policing their sites.

Conclusion

The Internet has reached the majority of the world’s population, and as the user base has grown, so have the time users spend online and the amount of content generated and consumed. This massive volume of content poses serious management and review challenges for large platforms and small companies alike. Content review will become increasingly difficult, and policy-related oversight will become increasingly strict. Although identifying problematic images and text is increasingly a solved problem, understanding audio and video material, whether manually or automatically, will take much longer. Detection becomes considerably more challenging when specific user contexts and political and social settings must be taken into account.

References:

Roberts, S. T. (2019). Behind the Screen: Content Moderation in the Shadows of Social Media. Yale University Press. https://doi.org/10.12987/9780300245318

Gillespie, T. (2018). All Platforms Moderate. In Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media (pp. 1-23). Yale University Press. https://doi.org/10.12987/9780300235029-001

Gillespie, T. (2017). Governance by and through Platforms. In J. Burgess, A. Marwick, & T. Poell (Eds.), The SAGE Handbook of Social Media (pp. 254-278). SAGE.

Dawson, M. (2020). Why Government Involvement in Content Moderation Could Be Problematic. Retrieved from: https://impakter.com/why-government-involvement-in-content-moderation-could-be-problematic/

Seobility. (2021). What is a dofollow link? Retrieved from: https://www.seobility.net/en/wiki/Dofollow_Link

Seobility. (2021). Digital Analytics. Retrieved from: https://www.seobility.net/en/wiki/Digital_Analytics

Youngson, N. (2021). Creative Commons Keyboard Borders Image. Retrieved from: https://www.picpedia.org/keyboard/b/borders.html

This work is licensed under a Creative Commons Attribution 4.0 International License.