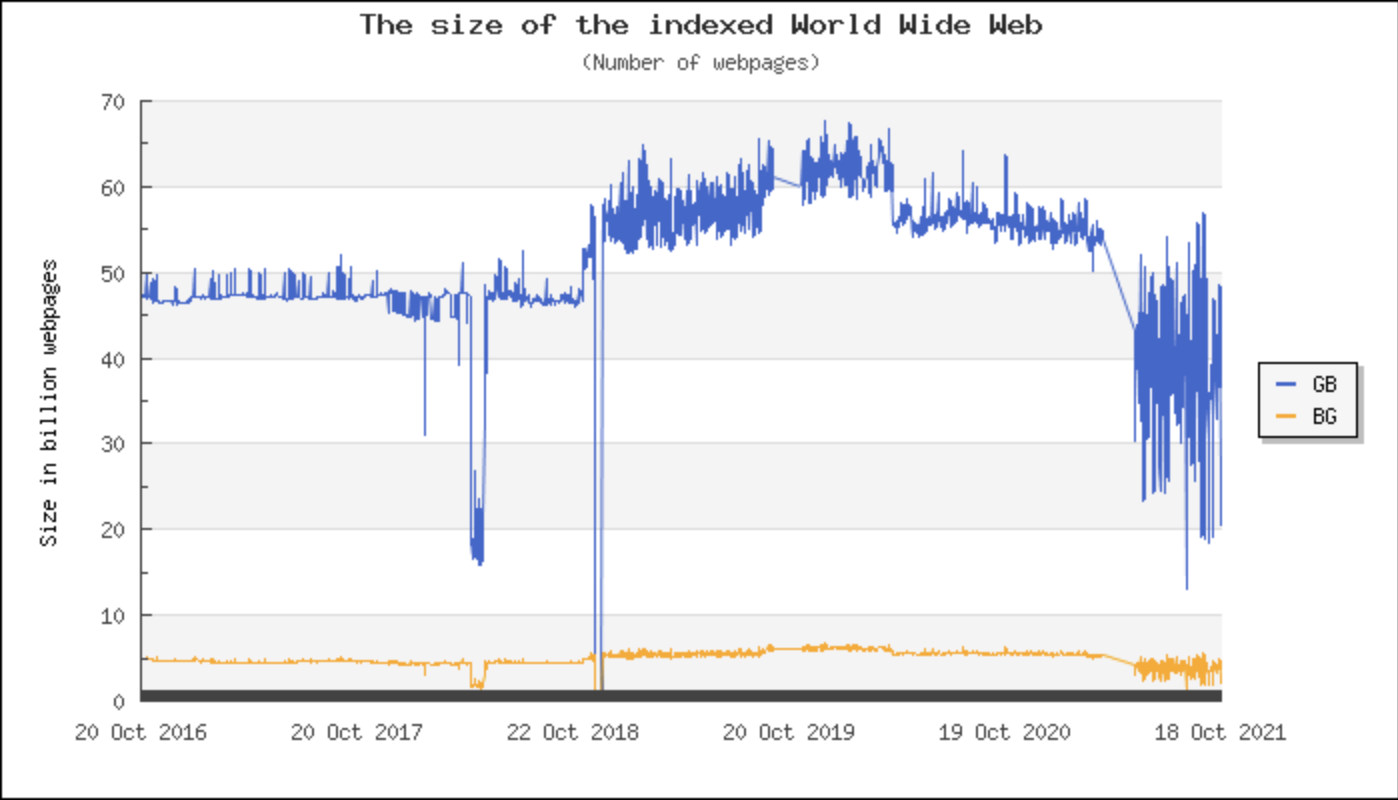

In 2007, Maurice de Kunder developed a method of estimating the number of pages on the internet, though it was restricted to pages readily available through standard search engines. That year, the resulting figure was some 47 billion.

Fourteen years later, the internet has only grown. Some 70 million new posts appear on WordPress every month, over 350 million photos are posted to Facebook every day, and over 500 hours of video are uploaded to YouTube every minute. Communication on an unprecedented scale comes with the unenviable task of moderating all this content across many platforms to ensure that it meets community standards, which naturally vary from one community to the next. The result of this convoluted process is a constant stream of content passing through many filters with varying degrees of censorship. But why is moderation necessary at all? How does the moderation process work? And who decides what makes the cut?

Content moderation, ‘the organized practice of screening user generated content posted to Internet sites, social media and other online outlets, in order to determine the appropriateness of the content for a given site, locality, or jurisdiction’ (Roberts, 2017), has a simple purpose: to filter out user-submitted content that is not welcome on the platform where it is posted. This can range from minor issues, such as irrelevant comments on a tech support forum, to hate speech and abusive behaviour on large social media platforms. The aim is to ensure that a platform stays true to its purpose and values and does not expose its users to unwelcome content.

Some argue, however, that moderation is an infringement of the right to free speech and expression, especially on larger platforms where political discussion takes place as part of a public forum. One recurring concern, for example, is the policing and censorship of women and their bodies. In 2018, Tumblr banned the posting of any images of ‘female presenting nipples’, and in 2016, Instagram limited hashtag searches related to women of colour. Despite its reputation as a free and open place, the internet is much more like traditional media than it claims to be, as platforms continue to set and adjust the goalposts for what constitutes acceptable social media conduct (Gillespie, 2010). Routinely, algorithms are put in place to enforce these standards, and just as routinely, they come up short: Tumblr’s ban on ‘NSFW’ content led to the flagging and removal of fully clothed selfies, pictures of whales and dolphins, and even Garfield, while ‘porn bots’ remained on the site unimpeded (Pilipets and Paasonen, 2020). If these algorithms cannot be trusted to moderate online platforms, then perhaps the better option is to employ real people?

‘Content moderator’ is a career that few people consider or examine closely. What sounds like a job of endlessly scrolling through Facebook posts and removing the occasional breastfeeding parent is in reality a constant stream of exposure to gore, abuse, and atrocities from around the world, all for a low hourly wage. This persistent exposure is often extremely draining and harmful to mental health, and many workers end up needing psychiatric help (Roberts, 2019). Accounts of these working conditions at major platforms such as Facebook and YouTube have been leaked to major news outlets, making the public aware both of the role’s disturbing reality and of its very existence, and challenging the perception that the internet is policed by none but the almighty algorithm.

Facebook, described by Mark Zuckerberg in 2019 as “the digital equivalent of a town square”, seems to many to be the modern agora. The difference, however, is that unlike the archetypal town square, where one might stand and share one’s thoughts with the general populace, on Facebook one’s speech is a commodity, and algorithms direct where that speech is heard in order to maximise its value, a process called ‘algorithmic audiencing’ (Riemer and Peter, 2021). This process warps an individual’s thoughts and ideas to serve another’s monetary gain, interfering with the relationship between speaker and audience: the platform acts as an intermediary that spreads or silences voices as needed to maximise profit. While less noticeable than conventional moderation, algorithmic audiencing nonetheless curtails free speech by diverting and distorting the distribution of messages to specific audiences, suppressing or highlighting particular ideas in a subtle but no less effective manner.

So what, then, is the answer to the problem of content moderation? Monitoring user-submitted content is undoubtedly necessary to weed out scams, abuse, hate speech and countless other harmful things amongst millions of posts, yet it also threatens to impinge on freedom of speech and risks stifling the voices of marginalised communities. Algorithms prove to be, at least for the time being, either woefully ineffective or overzealous and indiscriminate in what they purge, while commercialised content moderation exploits workers and endangers their mental wellbeing. Perhaps we should lobby for better working conditions for moderators. Perhaps we should redouble our efforts to create software that can do the job. Preferably, we should do both. What is clear is that the internet is the world’s new frontier, and one that has not yet been tamed.

References

Dewey, C. (2015, May 18). If you could print out the whole internet, how many pages would it be? The Washington Post. Retrieved October 15, 2021, from https://www.washingtonpost.com/news/the-intersect/wp/2015/05/18/if-you-could-print-out-the-whole-internet-how-many-pages-would-it-be/

Envisage Digital. (2020, December 31). How many blogs are published per day? (2021 update). Retrieved October 17, 2021, from https://www.envisagedigital.co.uk/number-blog-posts-published-daily/

Gillespie, T. (2010). The politics of ‘platforms’. New Media & Society, 12(3), 347–364.

Ho, K. (2020, May 5). 41 up-to-date Facebook facts and stats. Wishpond. Retrieved October 17, 2021, from https://blog.wishpond.com/post/115675435109/40-up-to-date-facebook-facts-and-stats#:~:text=On%20average%20350%20million%20photos%20are%20uploaded%20daily%20to%20Facebook.

Pilipets, E., & Paasonen, S. (2020). Nipples, memes, and algorithmic failure: NSFW critique of Tumblr censorship. New Media & Society. https://doi.org/10.1177/1461444820979280

Riemer, K., & Peter, S. (2021). Algorithmic audiencing: Why we need to rethink free speech on social media.

Roberts, S. T. (2017). Content moderation. In L. A. Schintler & C. L. McNeely (Eds.), Encyclopedia of Big Data (pp. 44–49). New York: Springer.

Roberts, S. T. (2019). Behind the Screen: Content Moderation in the Shadows of Social Media. Yale University Press.

Tubefilter. (2019, May 7). More than 500 hours of content are now being uploaded to YouTube every minute. Retrieved October 17, 2021, from https://www.tubefilter.com/2019/05/07/number-hours-video-uploaded-to-youtube-per-minute/

WorldWideWebSize.com. (2007). The size of the World Wide Web (the internet). Retrieved October 16, 2021, from https://www.worldwidewebsize.com/