The prevalence of child pornography, terrorism, and violent content on the Internet is arguably attributable to the freedom of speech that users enjoy on social media platforms. Not only can platforms not survive without moderation, but they are also not platforms without it (Gillespie, 2019, p. 21). Although platforms and governments have made several attempts at moderation, obstacles remain. This article argues that content moderation is indispensable to social media platforms because a healthy environment retains users and ensures sustainable development. It first explores the predicament that social media platforms face in moderating content, and then discusses existing attempts, ongoing debates, and government enforcement in content moderation.

Complicated equilibrium between platforms and users

The delicate degrees of intervention

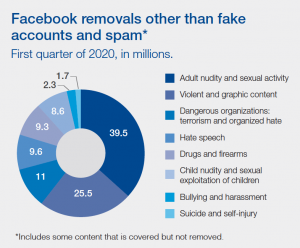

Intervening in user-created content is a delicate matter, because moderation that is either too strict or too lax will drive users away from social media platforms (Gillespie, 2017). Two opposing views circulate. Some assert that platforms’ inaction or permissiveness is responsible for the pervasiveness of extreme content (Gillespie, 2019). For instance, Facebook claims in its Community Standards Enforcement Report (2019) that it has improved the automated tools that identify and remove child exploitation content. Notably, however, the amount of such content reported rose from 5.8 million pieces in Q1 to 11.6 million in Q3. As a result, Mark Zuckerberg, the CEO of Facebook, has been mired in questions about regulatory failure.

“You are not working hard enough, and end-to-end encryption is not going to help the problem,” says Ann Wagner (Nuñez, 2019).

Others argue that platforms’ content moderation, whether carried out by automated tools or by human labour, is too sensitive and lacks objective judgment, so that content with positive meaning is sometimes wrongly penalized. Facebook reportedly examines three million posts a day, and Zuckerberg has acknowledged a mistake rate of about 10%, which implies that roughly 300,000 posts a day are handled wrongly (Paul, 2020, p. 5). Automated detection still faces computational limits when judgments depend on context (Roberts, 2019). Tumblr’s automated content system reportedly flagged a drawing of troll socks, a cartoon scorpion, and a cat comic as adult content because of bias in its algorithms (Matsakis, 2018). Such mistaken penalties against innocent content have left users disappointed with Tumblr’s moderation regime.

Tumblr’s AI is basically a stoned 13 year old boy. Everything looks like a butt. #ToosexyforTumblr pic.twitter.com/xWf4rpKiZH

— Taylor Agajanian (@CyborgLibrarian) December 6, 2018

Embedded Media 1 Twitter post (Agajanian, 2018)

Calibrating the degree of intervention in user-created content is tricky. An overly lenient moderation policy turns platforms into a hotbed for “toxic technoculture” (Massanari, 2017), which degrades the user experience. Overly intensive intervention, by contrast, harms the diversity and inclusiveness of platforms, leaving users disappointed and excluded. Users who seek a healthy and open digital environment will leave in either case. Losing users is disastrous for commercial social media platforms, which depend on them for sustained profit and development.

Conflicting values on content

Content moderation is demanding because social media platforms and users have distinct value orientations and objectives regarding content, which makes it difficult to design a moderation system that serves every party’s interests. Commercial platforms will selectively overlook some rule-breaking content in order to attract more traffic. Massanari (2017) describes how Reddit, as an open-source platform, permitted the distribution of celebrities’ nude photos because of the considerable income generated by subscribers’ views. For a commercial platform, profit is the primary consideration. Some user-created content violates rules or ethical standards while also generating enormous numbers of clicks and views, and platforms are likely to turn a blind eye to it because of the benefits of monetization. This orientation is embedded in “platform politics”, which includes the designs, norms, and policies that incentivize users to participate (Massanari, 2017). Driven by their business models, commercial platforms rarely disclose their underlying algorithms and moderation systems, so as to avoid commercial competition and public scrutiny (Roberts, 2019).

The cultural information gap between content moderators and users threatens the diversity and openness of social media platforms. Although human beings can make decisions based on diverse contexts, the subjectivity of human moderators is unavoidable. An expanding user base indicates not only a growing business but also increasingly complex and diverse communities, and it is difficult for content moderators to comprehend expressions from all users. For example, research shows that 41% of employees at Silicon Valley tech firms are well-educated white males (Center for Employment Equity, 2019). Regulating numerous contexts and meanings from a single cultural background and logic is therefore bound to be biased. Consequently, some minority communities and their content will be marginalized, resulting in the loss of users and in questions about the platforms’ inclusiveness and openness.

Algorithms and humans in moderation

Overreliance on automated tools harms platform content moderation because algorithms are insensitive to context and slow to respond. During the 2019 shooting in New Zealand, the attack was livestreamed on Facebook and related videos were distributed on Twitter and YouTube. The event and the circulating videos, which could still be found on Twitter hours after the attack (Marsh & Mulholland, 2019), aroused great public shock and raised questions about the effectiveness of content moderation. To encourage participation, platforms rarely pre-screen the images and videos that users upload (Roberts, 2019), so the appropriateness of content cannot be ensured. When a terrorist video resembles video-game footage, automated tools struggle to tell the two apart (Pham, 2019). The controversies about moderating content were in the spotlight again: should we use automated tools? What is a good combination of human moderation and algorithms?

Social media platforms should not hand over the entire obligation of content moderation to algorithmic tools. As platformization advances, it may seem that only automated tools are efficient enough to handle the immense volume of content to be moderated. Nevertheless, algorithms make judgments solely from their inputs, which leaves them deficient in sensitivity and reasoning across different contexts. Since the outbreak of Covid-19, platforms like Twitter have replaced human moderators with a larger proportion of AI, and they have admitted that the absence of human moderators reduces the attention paid to context in moderation decisions (Vijaya & Derella, 2020).

Automated tools should instead be used to reduce the unnecessary or harmful workload of content moderation employees. Human content moderators bear the brunt of immense amounts of violent and bloody content, exposure to which causes considerable psychological damage (Roberts, 2019). Human moderators are indispensable to commercial content moderation, yet it is undeniable that the job is high-intensity, high-risk, and low-paying. Gray, a content moderator at CPL, earned only $15.39 for his 8-hour night shift (Shead, 2020). Content moderators are hidden figures, out of public view behind the screen, who nonetheless make a significant contribution to the environment of social media platforms (Roberts, 2019). The neglect of their psychological health and welfare has put them at risk. Social media platforms must consider optimizing automated tools to pre-screen harmful content before it reaches human moderators.

Video 2 “It’s the worst job and no-one cares” (BBC Stories, 2018)

The power of government should be restricted

Admittedly, social media platforms have shortcomings in content moderation in terms of transparency and bias. However, governments should not have too much power over content moderation, because their lack of technological literacy can bring about vagueness, overbreadth, and even oppressiveness in laws. Lawmakers are largely unfamiliar with how social media platforms are managed, which makes the laws unclear in telling ordinary users what is permitted and what is forbidden (Mikaelyan, 2021). For example, the EARN IT Act lumps together the different child sexual exploitation laws of the 50 US states to respond to the same conduct (Mikaelyan, 2021). Such an approach is not rigorous enough; it will reduce the efficiency of law enforcement and lead to more debate about the standards of content moderation.

Looking ahead

Platforms still have many problems with self-regulation, yet government intervention alone will not readily solve the issue. Cooperation between governments, platforms, and users should be strengthened to address the controversies over content moderation. Mikaelyan (2021) argues that a mediator committee should be established to negotiate between the government, platforms, and users and to create a commercial content moderation standard based on voluntary rules. Content moderation is urgent and complex work, and there is still a long way to go.

This work is licensed under a Creative Commons Attribution 4.0 International License.

References

Gillespie, T. (2017). Governance by and through platforms. In J. Burgess, A. Marwick & T. Poell (Eds.), The SAGE Handbook of Social Media (pp. 254–278). London: SAGE.

Gillespie, T. (2019). All Platforms Moderate. In Custodians of the Internet (pp. 1–23). Yale University Press. https://doi.org/10.12987/9780300235029-001

Rosen, G. (2020, August 11). Community Standards Enforcement Report, November 2019 edition. About Facebook. Retrieved October 15, 2021, from https://about.fb.com/news/2019/11/community-standards-enforcement-report-nov-2019/.

Marsh, J., & Mulholland, T. (2019, March 16). How the Christchurch terrorist attack was made for Social Media. CNN. Retrieved October 14, 2021, from https://edition.cnn.com/2019/03/15/tech/christchurch-internet-radicalization-intl/index.html.

Massanari, A. (2017). Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Matsakis, L. (2018, December 5). Tumblr’s porn-detecting AI has one job-and it’s bad at it. Wired. Retrieved October 14, 2021, from https://www.wired.com/story/tumblr-porn-ai-adult-content/.

Mikaelyan, Y. (2021). Reimagining content moderation: Section 230 and the path to industry-government cooperation. Loyola of Los Angeles Entertainment Law Review, 41(2), 179–214.

Nuñez, M. (2019, November 14). Facebook and Instagram removed more than 12 million pieces of child porn. Forbes. Retrieved October 14, 2021, from https://www.forbes.com/sites/mnunez/2019/11/13/facebook-instagram-child-porn-removal-mark-zuckerberg-ook-and-instagram-was-wider-than-believed/?sh=60df28092158.

Paul, B. M. (2020). Who moderates the social media giants? A call to end outsourcing. issuu. Retrieved October 14, 2021, from https://issuu.com/nyusterncenterforbusinessandhumanri/docs/nyu_content_moderation_report_final_version?fr=sZWZmZjI1NjI1Ng.

Pham, S. (2019, March 15). Facebook, YouTube and Twitter struggle to deal with New Zealand Shooting Video. CNN. Retrieved October 14, 2021, from https://edition.cnn.com/2019/03/15/tech/new-zealand-shooting-video-facebook-youtube/index.html.

Roberts, S. T. (2019). Understanding Commercial Content Moderation. In Behind the Screen (pp. 33–72). Yale University Press.

Shead, S. (2020, November 13). TikTok is luring Facebook moderators to fill new trust and Safety Hubs. CNBC. Retrieved October 14, 2021, from https://www.cnbc.com/2020/11/12/tiktok-luring-facebook-content-moderators.html.

Center for Employment Equity, UMass Amherst. (2019, June 26). Is Silicon Valley Tech Diversity Possible Now? SSRN. Retrieved October 14, 2021, from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3407983.

Vijaya, & Derella, M. (2020). An update on our continuity strategy during COVID-19. Twitter. Retrieved October 14, 2021, from https://blog.twitter.com/en_us/topics/company/2020/An-update-on-our-continuity-strategy-during-COVID-19.