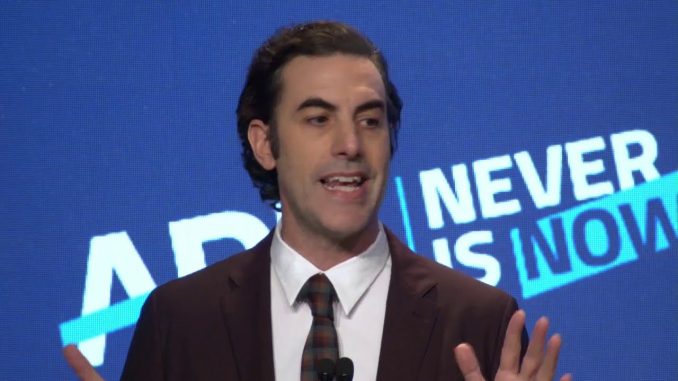

With the development of the internet, the growth of social media platforms has allowed the public to access information much more easily than before. With this boom comes potentially harmful and misleading information. Policing these online posts is extremely important, as lies and deception can negatively influence society and politics. The need for content moderation on social platforms was highlighted in Sacha Baron Cohen’s speech to the Anti-Defamation League (ADL) in 2019.

Throughout his speech, he attacks platforms such as Facebook and their algorithms, saying that they “deliberately amplify the type of content that keeps users engaged” and that “it’s why fake news outperforms real news because studies show that lies spread faster than truth” (Cohen, 2019). The necessity for content moderation on online platforms is also a prominent topic in academic writing, and this article draws on those academic findings to demonstrate its importance.

What is content moderation?

The concept of content moderation is explored in depth in Tarleton Gillespie’s chapter ‘All Platforms Moderate’. In this chapter, Gillespie discusses platforms such as Facebook and Twitter and the content available on their sites. He defines these platforms as online sites and services that:

1) host, organize, and circulate users’ shared content or social interactions for them, 2) without having produced or commissioned (the bulk of) that content, 3) built on an infrastructure, beneath that circulation of information, for processing data for customer service, advertising, and profit. (Gillespie, 2018, p. 18)

He argues that social media platforms must moderate their content in order to protect their users and to present their best face to advertisers, partners and the public (Gillespie, 2018, p. 5). A platform without content moderation will eventually lose its users and, with them, its income. Therefore, as Gillespie puts it, “platforms are not platforms without moderation” (Gillespie, 2018, p. 21). Although digital platforms clearly require content moderation in order to be successful, content moderation is itself a controversial topic. The difficulty for a platform lies in deciding how and why to intervene in the content published on it. Although platforms do not create the large majority of the content they host, they do make important choices about it (Gillespie, 2018, p. 19).

Within these social media platforms is a team of content moderators, who are responsible for deciding whether reported content should remain in circulation. These content moderators “must maintain sensitive judgment about what does or does not cross a line while also being regularly exposed to—traumatized by—the worst humanity has to offer” (Gillespie, 2018, p. 11).

In an interview conducted by VICE for its ‘Informer’ series, a former Facebook moderator describes a typical day on the job and the mental health struggles they faced after leaving the company. The interviewee discusses working through the emotional trauma caused by the job, saying that “it took a long time to realise how much this stuff had affected me” (VICE, 2021). In 2018, a class-action lawsuit accused Facebook of failing to provide a safe working environment, as the graphic images moderators were exposed to daily had led to the development of PTSD. The lawsuit resulted in a $52 million settlement covering more than 10,000 moderators (Dwoskin, 2020). Without a team of content moderators, this disturbing and psychologically scarring imagery would be unfiltered and available for all to see. As these former moderators’ experiences show, billions of users could otherwise be exposed to mentally distressing material.

Controversies as a result of poor content management

Although a digital platform must implement a content moderation system in order to succeed, platforms take vastly different approaches to doing so. An interesting example of this variation is the social platform Reddit. Reddit has attracted widespread disapproval for its “hands-off approach toward content shared by users” (Massanari, 2017, p. 331). The administrators in control of Reddit describe their role in content moderation as “neutral”, so as not to intervene in or regulate the discussion found on the platform (Massanari, 2017, p. 339). According to Massanari, these policies encourage the presence of ‘toxic technocultures’, which rely on the implicit or explicit harassment of others in an effort to push back against ideas of diversity, multiculturalism, and progressivism (Massanari, 2017, p. 333). An example of these ‘toxic technocultures’ is the ‘Gamergate’ controversy of 2014. A blog post written by the ex-partner of female game designer Zoe Quinn was posted to Reddit. The post contained extensive detail about their relationship, and it was taken down by moderators of the subreddit. However, the blog post then spread to another platform, 4chan. The post implied that Quinn owed their success to intimate relationships with gaming journalists. Soon the hashtag #Gamergate appeared across social media and

“Quinn became the centerpiece and token figure in a hateful campaign to delegitimize and harass women and their allies in the gaming community” (Massanari, 2017 p. 334).

It is likely that Reddit’s hands-off approach and the anonymous nature of its accounts contributed to this event. Since then, Reddit has changed its content rules to prohibit content that promotes violence against groups of people. However, inappropriate and potentially emotionally scarring content can still be found on Reddit, and more must be done in terms of content moderation to create a safer platform.

Another example that highlights the necessity for content moderation is the rise of ‘fake news’ in the media surrounding Donald Trump. Trump, who was the 45th President of the United States, was renowned on Twitter for sharing and spreading misinformation about the legitimacy of the 2020 election. His Twitter account was ultimately banned. In a blog post titled ‘Permanent suspension of @realDonaldTrump’, Twitter outlined the reasoning behind the ban. Trump’s tweets about the storming of the Capitol on the 6th of January breached the ‘Glorification of Violence policy’, which aims to “prevent the glorification of violence that could inspire others to replicate violent acts” (Twitter Inc., 2021). Trump’s use of fake and misleading information is potentially harmful in this advanced era of communication, where one false idea can spread rapidly across the digital world, and this amplifies the need for content moderation. This is highlighted by Sacha Baron Cohen’s appearance on the ‘Sway’ podcast, where he discusses the speech he delivered at the ADL in 2019, one year before the presidential election. Cohen’s motivation for the speech was his fear that “Trumpism would win again by spreading lies, conspiracies, and hate through social media” (‘Sway’, 2021). The fears expressed by Cohen show why content moderation is so important on digital platforms, as even high-profile politicians can convince many people that lies are truths.

The need for government influence on digital platforms

In my opinion, due to the major importance of content moderation in the digital age, governments should have a greater role in enforcing restrictions on social media platforms. Many governments around the globe, such as those of Germany, the UK and most recently Australia, have already implemented online safety legislation. Australia’s ‘Online Safety Act 2021’ will come into effect in January 2022. The act aims to keep service providers accountable for user content “that is defamatory, copyright infringing, or in breach of other laws regulating offensive content” (Johnson Winter & Slattery, 2021). Giving the government power to hold social media platforms responsible will reduce the amount of hateful and disturbing content on them. The need for this is illustrated in the journal article ‘Internet regulation as media policy: Rethinking the question of digital communication platform governance’, which points to the gross discrepancy between YouTube’s tolerance of extremist content and its treatment of copyrighted content (Flew, Martin & Suzor, 2019). YouTube’s failure to manage content properly has given extremists a large voice in the digital age and allowed them to generate revenue through misinformation and lies, which results in gullible people being led astray from the truth (Flew, Martin & Suzor, 2019).

To conclude, content moderation is an extremely important element of the digital age. The use of content moderation on social media platforms allows users to communicate with people across the world in a safe environment, without the threat of disturbing and deceptive material.


References

Cohen, S. B. (2019, November 3). Sacha Baron Cohen Slams Social Media Companies: “Your Product Is Defective” | NBC News. Retrieved from www.youtube.com website: https://www.youtube.com/watch?v=iZTOy70m-28&ab_channel=NBCNews

Creative Commons. (2019). CC Search. Retrieved from Creativecommons.org website: https://search.creativecommons.org/

Dwoskin, E. (2020, May 13). Facebook content moderator details trauma that prompted fight for $52 million PTSD settlement. Washington Post. Retrieved from https://www.washingtonpost.com/technology/2020/05/12/facebook-content-moderator-ptsd/

Flew, T., Martin, F., & Suzor, N. (2019). Internet regulation as media policy: Rethinking the question of digital communication platform governance. Journal of Digital Media & Policy, 10(1), 33–50. https://doi.org/10.1386/jdmp.10.1.33_1

Gillespie, T. (2018). All Platforms Moderate. In Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media (pp. 1–23). New Haven, CT: Yale University Press.

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

‘Sway’. (2021, February 25). Opinion | Sacha Baron Cohen Has a Message for Mark Zuckerberg. The New York Times. Retrieved from https://www.nytimes.com/2021/02/25/opinion/sway-kara-swisher-sacha-baron-cohen.html

Twitter Inc. (2021, January 8). Permanent suspension of @realDonaldTrump. Retrieved from blog.twitter.com website: https://blog.twitter.com/en_us/topics/company/2020/suspension

VICE. (2021, July 22). The Horrors of Being a Facebook Moderator | Informer. Retrieved October 15, 2021, from www.youtube.com website: https://www.youtube.com/watch?v=cHGbWn6iwHw&ab_channel=VICE