‘Global Techlash’, a term coined by The Economist for the backlash “against the once-feted corporate titans of Silicon Valley” (Flew, 2018, p26), is the growing political backlash associated with the rise of digital technologies, in particular the way they affect journalism and the spread of information.

Over the past decade, digital technologies have become ubiquitous in our everyday lives. We have seen the rise of social media platforms as critical tools for connecting with our friends, sharing updates with loved ones and accessing news. Platforms have become a major source of the information we access, including information that was previously accessed from credible and reliable sources, such as the media. When these platforms were developed, the aim was to create a free place that provided value, so that users would remain active for as long as possible. The more time users invest in a platform, the more valuable it is to advertisers. This business model has become one of the drivers of techlash.

Public Concerns driving Techlash

Engaging and valuable content became a key driver of profit, which led platforms to expand beyond sharing information between friends and family to incorporating news. However, the difference between a news platform and a social media platform sharing news is that news sites are required to meet media policy requirements, specifically those related to the accuracy and credibility of information.

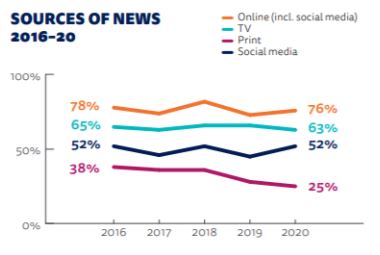

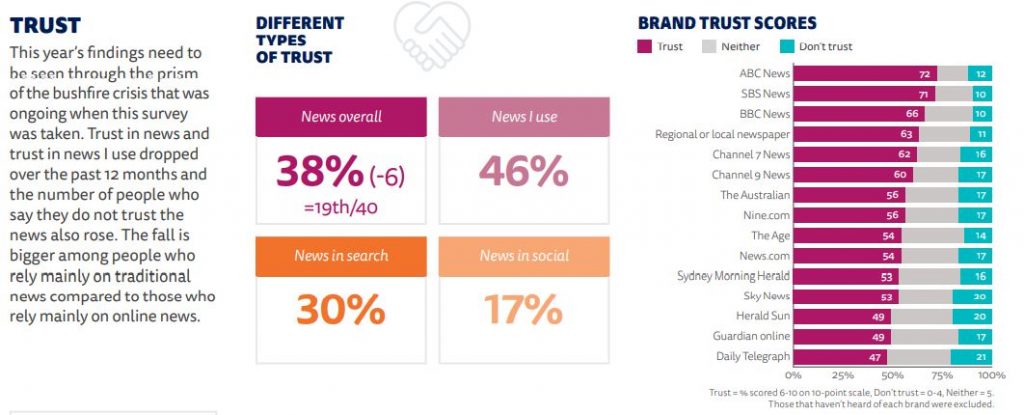

In Australia in 2020, 76% of people accessed their news online and 52% from social media (Reuters Institute Digital News Report 2020). This is in line with the growing global trend of online overtaking TV as the primary source of news. Mobile phones have also overtaken computers as a way to access news, with 58% of people accessing news on their mobile phones. Facebook continues to grow and is the primary social media platform for news, used by 39% of Australians.

Credible sources also joined in sharing news via social media platforms alongside all other content generators (Flew, 2018, p26). This saw a significant shift in the type of information people were consuming. Within a liberal democracy we rely on news to remain informed and to support our decision making. When the credibility and accuracy of this information changed, it created a major ethical dilemma and, as a result, there is rising concern amongst the public, who are calling for more to be done by tech giants.

Digital platforms use algorithms to edit and curate content for various reasons, including to maintain user engagement and to meet legal requirements.

The algorithms calculate what information will hold the user’s attention the longest, and edit accordingly. Content moderation is integral to maintaining user engagement and driving profit. Historically, digital platforms have been able to maintain their role as purely vessels for sharing information, simply technology and service providers. However, due to rising awareness of misinformation and its potential harm to society, digital platforms are unable to continue to claim this defence. Digital platforms claim to be “neutral intermediaries”, thanks to section 230 of the Communications Decency Act 1996 in the US. In essence, it means technology companies are not treated as the publishers of content generated by their users, yet they are still able to moderate when required. For example, inappropriate content could be removed. This ‘safe harbour’ provision protects platforms from liability for the content shared. However, because platforms are designed to continually tailor content, there is debate over whether this behaviour is closer to that of a publisher than a technology company (Flew, 2018, p26).

Here are examples of techlash and growing public concerns:

A world without facts means a world without Truth and without trust

Nobel peace prize winner Maria Ressa: ‘A world without facts means a world without truth’ – video. Source: The Guardian – https://www.theguardian.com/technology/2021/oct/09/facebook-biased-against-facts-nobel-peace-prize-winner-philippines-maria-ressa-misinformation

This month, Maria Ressa and Dmitry Muratov, both leading independent journalists in their home countries, were awarded the Nobel Peace Prize. The award recognised “the vital importance of an independent media to democracy and warned it was increasingly under assault”, with Ressa highlighting that “a world without facts means a world without truth and without trust”.

Through regulation of the industry, traditional media platforms are held accountable for sharing unbiased, fact-based information: information that a liberal democracy deems critical for enabling informed decision making amongst its civilian population. Digital platforms have adopted automated means to maintain the attention of their users regardless of whether the content is credible. The use of a “black box” of algorithmic content distribution and data management contributes to the crisis of trust in social institutions (Flew, 2018, p26), stemming from misinformation and a lack of truth. This can be seen in Australians’ trust in news dropping to 38% (Reuters Institute Digital News Report 2020).

Facebook favouring profits over public interests

This month, a former Facebook employee spoke out, leaking documents demonstrating how the tech giant “was lying to the public that it was making significant progress against hate, violence and misinformation”.

The employee claims that Facebook uses company policies to incentivise employees to maintain the engagement of its users, not out of malevolence, but to protect the revenue gained through an advertising model that values engagement over all else.

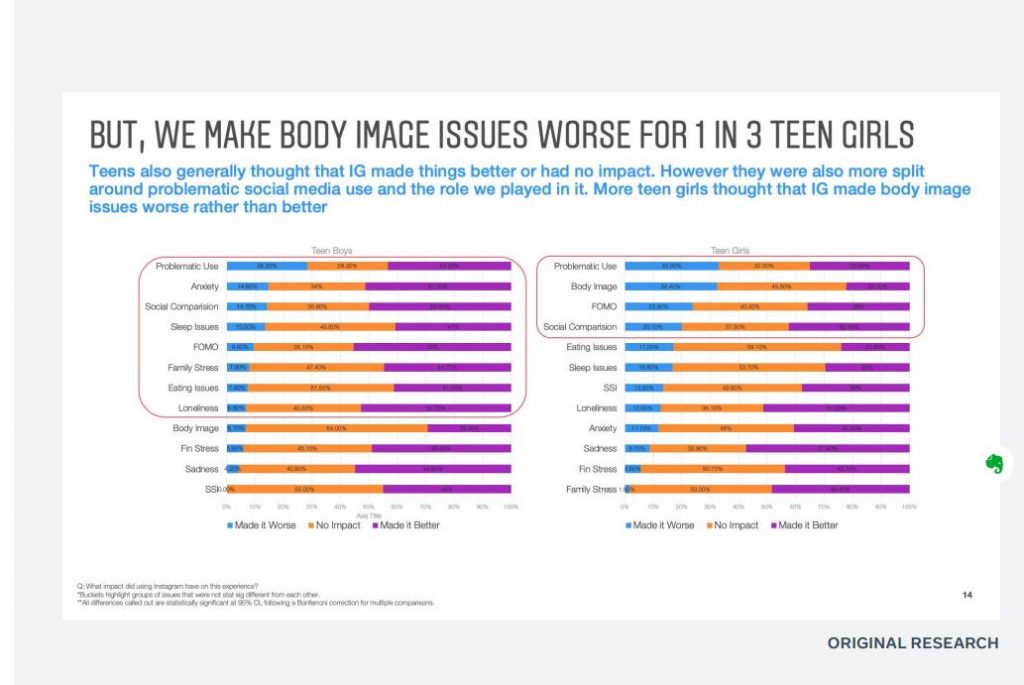

The leaked documents included data from internal research highlighting a link between Instagram and harm to teen mental health. This is another example of how the tech giants’ ability to spread information to a large number of users has wider-reaching societal consequences, increasing public concern. In this example the research claims that, for teenage girls, Instagram made body image issues worse rather than better.

Instagram-Teen-Annotated-Research-Deck-1.pdf. Source: The Guardian – https://www.theguardian.com/technology/2021/sep/29/facebook-hearing-latest-children-impact

The role of Governments, Civil Society Organisations and Technology Companies as we move forward

Using an independent third-party oversight approach, we can start to determine what defines inappropriate content and who is responsible for the associated consequences.

The existing policies on content distribution and ownership protect digital platforms from any associated liability, whilst still allowing them to moderate, disable and remove content (Flew, 2018, p26). It is becoming more and more obvious that social media platforms are not just technology companies. They distribute and manage the accessibility of news and information related to political and economic affairs. Media policy also requires a refresh in order to remain relevant and adequate to today’s digital media landscape and the way people consume information. Policies that were created when digital platforms were purely facilitators of content are no longer relevant, given the power and influence platforms now have over societal, economic and political factors.

Initial identified areas of concern include (Flew, 2018, p29):

- Consumer and data protection

- Anti-competitive practices

- Political advertising

- Fake news

- Online hate speech

- Social media generating online filter bubbles

- Traditional media industry job losses

- Digital platform addiction

Independent regulation cannot create change alone, but by applying the agreed methods for managing non-compliance it can reinforce the behaviours expected online, as agreed by both parties.

Soft law is a concept introduced to address this area of uncertainty, where regulation is still to be developed. It enables a collaborative approach in which government and industry engage an independent third party. In the example provided by the Contract for the Web, a framework of general rules and laws is provided for the industry to use when shaping the operations of their business. An additional third party is required as an oversight body to sit between governments and industry, one that recognises the challenges of applying existing regulations to industries that do not fit the traditional criteria. For soft law to work, it requires cooperation amongst regulated entities to abide by the framework, and the necessary means to address non-compliance.

Co-regulation alone, without the third party, is unlikely to work as there is too much at stake for both parties. Governments, through their regulations, need to change the way they view existing business values, and digital platforms need to consider the ethical implications (Flew, 2018, p29).

Based on the examples provided, it is evident that alongside the acceleration of digital technologies there is societal, economic and political backlash in relation to how we consume and trust information. Techlash is causing legitimate public concern and I would expect to see more collaboration across industries, governments and nation states to combat this complex new environment.

References

Berners-Lee, T. (2019). 30 years on, what’s next #ForTheWeb?. Retrieved from the World Wide Web Foundation website: https://webfoundation.org/2019/03/web-birthday-30/

CERN. Tim Berners-Lee’s proposal. Retrieved from http://info.cern.ch/Proposal.html

Contract for the Web, Contract for the Web: a global plan of action to make our online world safe and empowering for everyone. Retrieved from https://contractfortheweb.org/

Facebook (2019), Teen Mental Health Deep Dive. Retrieved October 9, 2021, from https://about.fb.com/wp-content/uploads/2021/09/Instagram-Teen-Annotated-Research-Deck-2.pdf

Flew, T. (2018). Platforms on Trial. InterMEDIA, 46(2), 24-29.

Gillespie, T. (2017). Regulation of and by Platforms. In J. Burgess, A. E. Marwick & T. Poell (Eds.), The SAGE Handbook of Social Media (pp. 254-273). London: SAGE Publications.

Kari, P., & Milmo, D. (2021). Facebook putting profit before public good, says whistleblower Frances Haugen. The Guardian website https://www.theguardian.com/technology/2021/oct/03/former-facebook-employee-frances-haugen-identifies-herself-as-whistleblower#

Milmo, D., & Paul, K. (2021). Facebook disputes its own research showing harmful effects of Instagram on teens’ mental health. The Guardian website https://www.theguardian.com/technology/2021/sep/29/facebook-hearing-latest-children-impact

Reuters Institute for the Study of Journalism. (2020). Digital News Report 2020. Oxford, UK: University of Oxford.

Savage, M. (2021). Facebook is ‘biased against facts’, says Nobel prize winner. The Guardian website https://www.theguardian.com/world/2021/oct/08/journalists-maria-ressa-and-dmitry-muratov-win-nobel-peace-prize