Content moderation is the practice of monitoring and screening user-generated content against platform-specific rules and guidelines to determine whether that content may be published on an online platform. When a user submits content to a website, the content passes through a screening process to ensure that it complies with the site's rules and is not harassing, inappropriate, or illegal. Content moderation is common on online platforms that rely heavily on user-generated content, such as forums and online communities, dating sites, sharing-economy services, online marketplaces, and social media platforms (Myers West, 2018). There are several types of content moderation, including pre-moderation, post-moderation, reactive moderation, distributed moderation, and automated moderation. A platform should select the moderation method that suits its needs, which requires a clear picture of what the method must achieve and how it will be set up. The rules and guidelines for content moderation should also be clear to everyone directly involved in moderating the platform.

Content moderation issues on digital platforms

Legal and social norms around speech have evolved significantly in recent years and across many cultural contexts. By comparison, platforms are still in their adolescence, and online speech is a recent development. The challenges platforms now face are issues that took other media fields many years to work through and that remain far from resolved there. Several large platforms adopted a hands-off approach to content moderation (Gorwa, Binns, & Katzenbach, 2020). They were not prepared for how this approach would play out across different social, legal, and political systems, or in conflict-ridden environments steeped in racial tension. It took considerable time and many international missteps for platforms to realize that even under the best conditions a hands-off approach leads to problems, yet a hands-on approach causes problems as well.

Figure 1: Social media week by Schiller is licensed under CC BY-NC-SA 2.0

As platforms come to terms with their global footprints, they are responding with measures ranging from removing harmful content to rolling out new features and interventions. A growing number of platforms are adopting industrial approaches to content moderation, contracting large numbers of moderators and deploying software to identify problematic content. These frontline moderators and tools may be overseen by in-house policy teams hired to deal with rule changes, public backlash, and emerging problems (Caplan, 2018). Even as platforms build out their policies and teams, certain types of users and content still prompt more direct, ad hoc assessments by in-house moderation teams. Over the last few years, the gap has grown between how platforms moderate the many ordinary users of their services and how they treat the few who attract attention with charged commentary.

The existing arrangements raise questions about how the speech of many users is lost within a large, complex system that can struggle to be sensitive to context, language, and culture. While over-moderation and censorship are problems, the content that platforms decide to leave up is equally important. Many recent content moderation controversies stem from platforms failing to act against content that violates their own community standards or policies. Platform policies are often built around use cases from the platform's country of incorporation, without due consideration of the context in other regions (Massanari, 2017). Another case in point is the stark difference in how platforms think about their obligations regarding hate speech and misinformation in different markets.

Attempts to implement content moderation

The psychological effects of viewing harmful content are well documented, with reports of moderators experiencing symptoms of post-traumatic stress disorder (PTSD) and other mental health problems as a result of the disturbing content to which they are exposed (Banchik, 2021). Human abilities still exceed state-of-the-art artificial intelligence (AI) for many data-processing tasks, including content moderation. Although AI will continue to improve, human computation enables organizations to deliver greater system capabilities. The growth of human computation also creates new economic opportunities. However, the notion of a "human API" highlights how human labor can be mediated and obscured by opaque application programming interfaces (APIs), which are central to integrating human work into such systems. Technical jargon can also hide or diminish the critical role of the human workers who power these systems, perpetuating the invisibility of a global workforce. Content moderation is a natural niche for human computation because such content is difficult to moderate automatically (Gerrard, 2018). Yet while human computation mechanisms now make it possible to call on human workers in these cases, content moderation also seems like exactly the kind of task one would wish to automate, since exposure to disturbing content cannot harm an algorithm.

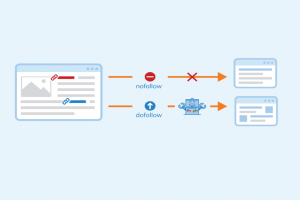

Figure 2: Do-follow links by Seobility is licensed under CC BY-SA 4.0

Over the past two decades, online platforms that allow users to interact and upload content for others to view have become integral to many people's lives and have provided many benefits to society. However, there is growing awareness among policymakers, businesses, and the public of the potential damage caused by harmful online material (Roberts, 2019). User-generated content (UGC) contributes to the richness and variety of content on the internet, but it also enables some users to post content that can harm others, especially vulnerable people and children. As the volume of UGC uploaded by platform users continues to grow, it has become impossible to identify and remove harmful content at the necessary speed and scale using traditional, human-led approaches. Effective moderation of harmful content is challenging for several reasons. While many challenges affect both human and automated moderation systems, some are particularly difficult for AI-based automated systems to overcome (Roberts, 2018). Potentially harmful content spans a wide range, including child abuse material, hate speech, violent content, sexual content, spam, and graphic content. Some harmful content can be identified by analyzing the content alone; other content requires an understanding of its surrounding context to determine whether or not it is harmful.

Role of government in enforcing restrictions on content moderation

The role of online speech and social media in civil society is under scrutiny. Social media has contributed to ethnic and religious violence, and harmful misinformation, including misinformation about the COVID-19 pandemic, has spread quickly and easily. Most major platforms have similar content moderation policies. They bar posts that encourage or glorify violence, posts that are sexually explicit, and posts that contain hate speech, which they define as attacking a person on the basis of race, sexual orientation, or gender. The main platforms have also taken steps to limit disinformation, including fact-checking posts, banning political ads, and labeling the accounts of state-run media (Samples, 2019). These platforms also comply with the laws of the countries in which they operate, which may restrict speech further.

Figure 3: AI Cartoon to assist with content moderation by Clapper is licensed under CC BY-SA 4.0

In addition to using AI-powered moderation software, many organizations employ large numbers of people to screen posts for violations. Even so, platforms do not enforce their rules consistently. Several countries have proposed or implemented legislation to force social media companies to do more to police online discourse. Speech on social media that is directly tied to violence may be regulated by the government, but preventing the harms caused by hate speech or fake news lies beyond the government's jurisdiction.

Through content moderation, every review, picture, and comment can be identified and later used as promotional material. Recently, social media platforms have taken a strong stand against political advertising. Today's customers want to participate actively in everything, and organizations have gained immensely from this involvement. An effective content moderation process maintains the sanity of the platform, leverages UGC to help other customers make informed decisions, and ensures a quality experience for all users. However, content moderation still poses several challenges for countries, and these must be addressed properly.

References

Banchik, A. V. (2021). Disappearing acts: Content moderation and emergent practices to preserve at-risk human rights–related content. New Media & Society, 23(6), 1527-1544. Retrieved from https://doi.org/10.1177%2F1461444820912724

Caplan, R. (2018). Content or context moderation? Retrieved from https://apo.org.au/node/203666

Clapper, L. (2020). AI Cartoon to assist with content moderation. Retrieved from https://commons.wikipedia.org/wiki/File:AI-edits.jpg

Fagan, F. (2020). Optimal social media content moderation and platform immunities. European Journal of Law and Economics, 50(3), 437-449. Retrieved from https://doi.org/10.1007/s10657-020-09653-7

Gerrard, Y. (2018). Beyond the hashtag: Circumventing content moderation on social media. New Media & Society, 20(12), 4492-4511. Retrieved from https://doi.org/10.1177%2F1461444818776611

Gorwa, R., Binns, R., & Katzenbach, C. (2020). Algorithmic content moderation: Technical and political challenges in the automation of platform governance. Big Data & Society, 7(1), 2053951719897945. Retrieved from https://doi.org/10.1177%2F2053951719897945

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329-346. Retrieved from https://doi.org/10.1177%2F1461444815608807

Myers West, S. (2018). Censored, suspended, shadowbanned: User interpretations of content moderation on social media platforms. New Media & Society, 20(11), 4366-4383. Retrieved from https://doi.org/10.1177%2F1461444818773059

Roberts, S. T. (2018). Digital detritus: ‘Error’ and the logic of opacity in social media content moderation. First Monday. Retrieved from https://doi.org/10.5210/fm.v23i3.8283

Roberts, S. T. (2019). Behind the screen. Yale University Press. Retrieved from https://doi.org/10.12987/9780300245318

Samples, J. (2019). Why the government should not regulate content moderation of social media. Cato Institute Policy Analysis, (865). Retrieved from https://ssrn.com/abstract=3502843

Schiller, A. L. (2010). Social Media Week: Private in Social – Plattform – vs Nutzerverantwortung. Retrieved from https://www.flickr.com/photos/frauleinschiller/4328523884/

Seobility. (2021). What are Dofollow Links? Retrieved from https://www.seobility.net/en/wiki/Dofollow_Link

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.