Example

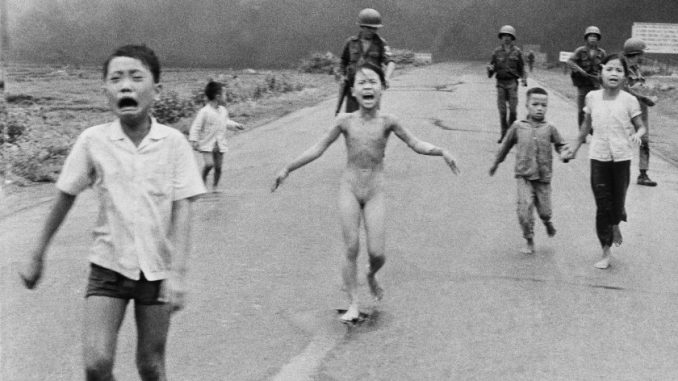

In 2016, Facebook deleted a post by a Norwegian writer that included the “Napalm girl” photograph. The girl in the photo was naked and visibly in pain, which violated the platform's child nudity policy. Yet the photo captures a real tragedy and is an important historical document of the Vietnam War. After it had been removed for more than a week and had drawn criticism in news reports around the world, Facebook restored it (Gillespie, 2018).

Not everything is black or white. There will always be grey areas, and the moderation of digital platforms is no exception, because people will always disagree about what is just. Moreover, in a network society this large and complex, a thousand people see a thousand Hamlets: everyone has a different perspective.

Much celebrated art engages with sexuality, and sometimes even with obscenity, so it is hard to judge whether a given work is valuable, educational art or harmful material that should be banned. Many paintings by the old masters, for example, depict nudes. There is even a notion of the “aesthetics of violence”, which treats violence as an aesthetic subject. As a result, the boundaries of content review standards are blurred and hard to draw precisely.

Challenges of content moderation

One challenge platforms face is deciding when to intervene, against what content, and how (Gillespie, 2018). Should users who post dangerous or borderline content simply be warned, or should they face sanctions such as forced deletion, or having their accounts suspended or banned? Should such content be handled proactively, before anyone complains, or only restricted after viewers report it? Because the boundaries are blurred, intervention carries a double risk of losing users (Gillespie, 2018). Too much intervention takes away users' freedom of speech and the pleasure of debating opinions online. Too little allows illegal, inappropriate, and distorted content to spread, and the resulting toxic environment also drives audiences away.

Another major challenge is building and running a content review system that avoids both extremes at once (Gillespie, 2018). Content reviewers also need a keen sense of when content crosses the line. It is demanding work carried out at the edge of what is legal, with low pay, a heavy workload, and real personal risk. Reviewers must therefore be objective, judging not only by their own values but by the broader norms of society. How to design such a system, and which standards it should enforce, are also urgent questions (Gillespie, 2018). The system embodies the company's values, and its core task is balancing order against commercial interests. Such a system is not built in a day; it is refined gradually through the everyday conflicts that arise online. This raises a further question: should platforms follow the suggestions of their most active users, and can those users speak for the silent majority (Gillespie, 2018)? There is no absolute answer, so a platform can only seek reasonable compromise and mediation among users with different values and expectations.

Attempts to implement content moderation

- Human moderator

Platforms hire dedicated full-time staff, or outsource to specialist companies, to filter user content manually. This method is slow and can never be fully consistent, and constant exposure to disturbing content harms reviewers' mental health (Davis, 2020).

- Keyword algorithm

Keywords associated with harmful content are stored in a library of banned material, and computational tools, such as automatic searches for prohibited text, screen out content that matches (Roberts, 2019). Alternatively, the platform can block such content at the moment users try to post it. Machines work much faster than humans, but the keyword library must be updated manually, and the filter is easy to circumvent: users need only replace a sensitive word with a look-alike spelling, for example swapping the letter “o” for a zero (Davis, 2020).

- Artificial Intelligence

Artificial intelligence that can read contextual clues can detect violations and abusive behaviour across language barriers (Davis, 2020). It builds on the first two methods: it is smarter than keyword filters and more efficient than manual review. Because it can also update its own reference library through data analysis, it is the most practical of the three (Roberts, 2019).
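The keyword approach, and why look-alike spellings defeat it, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any platform's actual system: the blocklist words, the `LOOKALIKES` substitution table, and the function names are all hypothetical. It compares a naive word match with one that first undoes common character swaps.

```python
import re

# Hypothetical blocklist standing in for a platform's library of banned material.
BLOCKLIST = {"scam", "spam"}

# Assumed, deliberately incomplete table of look-alike characters
# (digits and symbols that users swap in for letters).
LOOKALIKES = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"})


def naive_match(text: str) -> set[str]:
    """Return blocklisted words found by a plain, case-insensitive word match."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return words & BLOCKLIST


def normalize(text: str) -> str:
    """Lowercase, undo look-alike swaps, then drop anything that is not a letter or whitespace."""
    text = text.lower().translate(LOOKALIKES)
    return re.sub(r"[^a-z\s]", "", text)


def normalized_match(text: str) -> set[str]:
    """Return blocklisted words found after normalization."""
    return set(normalize(text).split()) & BLOCKLIST


if __name__ == "__main__":
    post = "Click here, this is n0t a sc4m!"
    print(naive_match(post))       # set(): "sc4m" is split into "sc" and "m"
    print(normalized_match(post))  # {'scam'}
```

Normalization only catches tricks someone has already anticipated: spacing the letters out (“s c a m”) still slips through, which is why the keyword library and substitution table need the ongoing manual updates described above.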

Why is it important?

If a platform’s content review system is lax, distributors of harmful content will exploit it, and large amounts of pornographic, violent, and other prohibited material will be published and reposted, creating a toxic online environment. This disrupts the normal user experience and drives users away; without users the platform loses its advertising revenue and may eventually shut down or be forcibly taken offline. When harmful content keeps appearing without effective moderation, the platform also faces criticism from the media, audiences, and governments, and may bear legal liability. Effective content review protects both the platform's reputation and the integrity of the information it hosts.

Should government play a big role?

The government should not have more power over content censorship. For example, Donald Trump was notorious for tweeting erratically and aggressively, even threatening North Korea with nuclear war. His tweets drew enormous attention and discussion, yet one of Twitter's rules prohibits posting threatening tweets. On that rule alone, his tweets violated the platform's policy, and moderators should have removed them or attached a warning, but they did not. Does holding the presidency, and the political power that comes with it, exempt him from Twitter's guidelines? Such special treatment is already unfair to ordinary users, so the government should not be given more power.

The political climate is not always benign; it depends on who holds power. If governments set the rules, officials' interpretation of vague terms becomes the standard for content review; in other words, their standard becomes everyone's standard. It also hands them the law as a weapon: if they feel offended by users who criticize the government, they can use the law to silence critics and control public expression. It is also far from clear that governments have the resources and time to oversee content moderation on digital platforms while protecting users' freedom of speech fairly and consistently (Dawson, 2020).

On the other hand, government intervention can protect users effectively through legal means, and everyone hopes to exercise their rights safely in the digital world. Restrictions imposed by the state should be grounded in law, clear, and non-discriminatory. The state needs to be transparent about what content it restricts, what information it shares, and how it accesses data. Users should be able to appeal decisions they consider unfair. Social media companies often face state scrutiny and restrictions because they fail to remove harmful content promptly or completely. Companies and states should therefore cooperate to protect users while respecting the rights of every user (Moderating online content, 2021).

This work is licensed under a Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/).

References

Davis, L. (2020, December 15). 7 best practices for content moderation. Prevent Online Toxicity with Content Moderation. Retrieved October 15, 2021, from https://www.spectrumlabsai.com/the-blog/best-practices-for-content-moderation

Dawson, M. (2020, February 26). Why government involvement in content moderation could be problematic. Impakter. Retrieved October 15, 2021, from https://impakter.com/why-government-involvement-in-content-moderation-could-be-problematic/

Gillespie, T. (2018). All platforms moderate. In Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media (pp. 1-23). Yale University Press. https://doi.org/10.12987/9780300235029-001

Moderating online content: Fighting harm or silencing dissent? (2021, July 23). OHCHR. Retrieved October 15, 2021, from https://www.ohchr.org/EN/NewsEvents/Pages/Online-content-regulation.aspx

Roberts, S. (2019). Understanding commercial content moderation. In Behind the screen: Content moderation in the shadows of social media (pp. 33-72). Yale University Press. https://doi.org/10.12987/9780300245318-003