Content Moderation across Social Media Platforms: Double standards, Donald Trump and Governance

By Charlotte Robertson – Tutorial #5

"Exploring Governance Structures on Social Media Platforms" by POLISEA is licensed under CC BY-NC-SA 2.0

What is Content Moderation?

Content moderation is the practice of applying a pre-determined set of rules and guidelines to user-generated content to determine whether a given communication is permitted. The work of content moderators has been portrayed negatively in the past and has been criticised as an unethical line of work, but it remains necessary because AI is not yet developed enough to do the job without humans. It has been described as a workplace where employees can be fired for making just a few mistakes a week. Content moderation is needed to ensure the unfettered flow of information.

Double standards of content moderation

Freedom of expression is one of the foundations upon which democracy is based (De Gregorio 2020). This broad principle clashes with the disconcerting evolution of the algorithmic society, in which artificial intelligence technologies police the stream of information online according to practical standards adopted by social media platforms. Online content moderation affects users’ fundamental rights and democratic values, especially since online platforms independently set their own requirements for content removal (De Gregorio 2020).

Unlawful online activity is hard to track, which makes it difficult to police. Users can choose to be anonymous on some platforms, making it easier for illicit content to move across platforms without being identified (Gillespie, 2017). Social media platforms have taken on the responsibility of curating and policing the activity of their users, not just to meet legal requirements but also to avoid having extra policies forced on them by government regulators. Platforms also police themselves to avoid losing offended or harassed users and to defend their commercial image, as well as to uphold their own personal and institutional ethics (Gillespie, 2017). However, legal action against individuals comes at a cost and only makes sense in important and valuable cases.

Inequity caused by platform discretion rules

Platforms set out what types of content are policed in blog posts, announcements and other platform rules. However, despite the efforts of regulators specifically focussed on terrorist and extremist content, these groups remain impervious to legal action because the rules fail to explicitly clarify which groups have been categorised as terrorist groups, which allows platforms discretion in enforcement (Díaz and Hecht-Felella 2021, p. 5). Platforms’ content moderation is largely moulded by government calls to pursue an offensive strategy against these groups, for example against Islamic State of Iraq and Syria (ISIS) propaganda. These rules and regulations unreasonably lump speech from Muslim and Arabic-speaking communities into this category (Díaz and Hecht-Felella 2021, p. 5).

Changing the behaviour of many individuals is much more difficult, whether it’s users sharing copyrighted music and films or people using the internet to harass others (Suzor, 2019). Any answer to this must involve technology companies and internet intermediaries in some way, because they have the power to influence a vast number of users through their design choices and policies (Suzor, 2019).

During the past 20 years, the copyright industry has enlisted a wide variety of technology companies and internet intermediaries to protect its interests (Suzor, 2019). However, regulation differs between countries. In the United States of America, for example, platforms are largely left to regulate their users’ content themselves because of a fundamental reluctance by regulators to restrict speech. Internationally, in contrast, these same platforms must deal with a wide variety of differing restrictions (Gillespie, 2017).

In addition to various global regulatory regimes, platforms are forced to self-regulate in response to public concerns. Examples include the banning of Donald Trump from Facebook and Twitter, the removal of likes on Instagram and the addition of blue ticks to verify profiles. Self-regulation is an alternative to interference by governments: it allows platforms to make their own choices about how they govern their users’ content, independently of the government (Solum, 2009).

Trump’s Controversial Ban from Social Media Platforms

Trump’s banning from social media was an attempt to shield against further incitement of violence, and it came after months of Trump’s uncompromising and unsupported accusations of voter fraud and his denial of his loss in the 2020 election (Denham 2021). The platforms have been met with complaints of censorship from Trump’s allies and hesitant praise from others who see the bans as long overdue (Denham 2021).

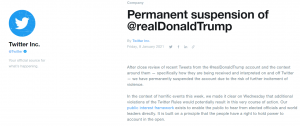

By Twitter Inc. https://blog.twitter.com/en_us/topics/company/2020/suspension

Twitter was the first social media platform to take permanent action against Trump. It initially locked him out of his account for 12 hours on Jan. 6, 2021, after the Capitol assault, and stated that access would not be restored until three of his tweets that violated its content policy were deleted. It later suspended his account permanently.

“Trump Facebook” by Book Catalog is licensed under CC BY 2.0

https://www.youtube.com/watch?v=OBZoVpmbwPk

Similarly, Facebook banned Trump from the platform for 24 hours on Jan. 6 after he posted a video telling his supporters to go home after they had breached the Capitol. The following day, Facebook chief executive Mark Zuckerberg announced that Trump would be banned indefinitely from the platform, at least until the presidential transition was completed, in an attempt to quash further violent acts from his supporters (Denham 2021).

Read More: https://www.washingtonpost.com/technology/2021/01/11/trump-banned-social-media/

On June 4, 2021, Facebook announced that Trump’s suspension would last for two years. Nick Clegg, VP of Global Affairs for Facebook, stated: “Given the gravity of the circumstances that led to Mr. Trump’s suspension, we believe his actions constituted a severe violation of our rules which merit the highest penalty available under the new enforcement protocols.”

Read More: https://www.glamour.com/story/donald-trump-social-media-bans-twitter-facebook

Government involvement in enforcing content moderation restrictions

Government involvement in content moderation may counteract the freedom of expression users exercise on social media platforms and mute some of our voices (Dawson 2020). However, it may also amplify the voices of others who do not regularly have the ability to be heard. For example, Donald Trump, with his 72.6 million followers, has become the most infamous politician on social media, especially on Twitter. Banning him from posting about political issues may mute some voices while simultaneously amplifying the voices of others (Dawson 2020).

The difficulty governments face in attempting to regulate individuals’ behaviour on social media platforms lies in balancing individuals’ rights of expression with governments’ obligations to protect the vulnerable and preserve broader societal expectations of acceptable behaviour. An approach by certain governments that places content regulation obligations solely on the individual platforms seems destined to fail, given the inherent conflict between the platforms’ profit motive (derived from constant user and content growth) and the objective of regulations, which have the effect of limiting certain content and driving away the users attracted to it.

References

Dawson, M., 2021. Why Government Involvement in Content Moderation Could Be Problematic. [online] Impakter. <https://impakter.com/why-government-involvement-in-content-moderation-could-be-problematic/>

De Gregorio, G., 2020. Democratising online content moderation: A constitutional framework. [online] ScienceDirect. <https://www.sciencedirect.com/science/article/abs/pii/S0267364919303851>

Denham, H., 2021. These are the platforms that have banned Trump and his allies. [online] The Washington Post. <https://www.washingtonpost.com/technology/2021/01/11/trump-banned-social-media/>

Díaz, Á. and Hecht-Felella, L., 2021. Double Standards in Social Media Content Moderation. [online] Brennancenter.org. <https://www.brennancenter.org/sites/default/files/2021-08/Double_Standards_Content_Moderation.pdf>

Gillespie, T., 2017. Governance by and through Platforms. In: J. Burgess, A. Marwick and T. Poell, eds., The SAGE Handbook of Social Media. London: SAGE, pp. 254-278.

Newton, C., 2019. The secret lives of Facebook moderators in America. [online] The Verge. <https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona>

Blog.twitter.com. 2021. Permanent suspension of @realDonaldTrump. [online] <https://blog.twitter.com/en_us/topics/company/2020/suspension>

Tannenbaum, E., 2021. Donald Trump Is Banned From Facebook for Two Years. [online] Glamour. <https://www.glamour.com/story/donald-trump-social-media-bans-twitter-facebook>

Solum, L., 2009. Models of Internet Governance. In: L. Bygrave and J. Bing, eds., Internet Governance: Infrastructure and Institutions. Oxford Scholarship Online. <https://oxford.universitypressscholarship.com/view/10.1093/acprof:oso/9780199561131.001.0001/acprof-9780199561131-chapter-3>

Suzor, N., 2019. How Copyright Shaped the Internet. In: Lawless: The Secret Rules That Govern Our Lives. Cambridge: Cambridge University Press, pp. 92-122.

YouTube, 2014. What is Content Moderation? [online] <https://www.youtube.com/watch?v=zYKQmtrPTOY>