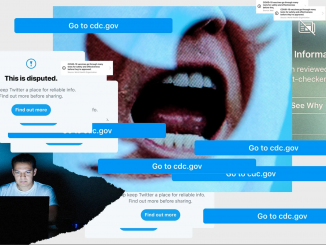

With the development of the internet and the rise of social media platforms such as Facebook, Twitter, and TikTok, users are encouraged not only to access content but also to contribute their own content to the public. However, this creates a problem: some of the content users contribute is inappropriate, harmful, or misleading. This is where content moderation comes in, dealing with such content and providing a healthy online environment for users. Moderation can take the form of filtering out low-quality and vulgar material or displaying a warning before users access certain content. However, content moderation faces two important challenges: cultural boundaries and human labor. This essay examines both challenges, considers the role of artificial intelligence (AI), and then discusses whether governments should play an important role in moderating social media content.

The challenges of implementing content moderation on digital platforms

- Cultural diversity leads to divergent moderation decisions

Gillespie (2018) argued that the rise of platforms has given people greater freedom of speech on the internet, and that the ability of users to generate content on social media platforms makes the governance of that content all the more important. Gillespie (2018) also pointed out that "platforms don’t just mediate public discourse, they constitute it… towards particular ends" (p. 257). In other words, social media platforms function like small-scale societies, with content contributed by all kinds of people from all over the world. As a result, the same content may need to be moderated differently depending on where it reaches users. Hence, Gillespie (2018) argued that it is difficult to draw the line between acceptable and unacceptable content.

Gillespie (2018) described the case of Egeland, who posted the well-known photograph of Kim Phuc, a naked child suffering from napalm burns, and was suspended by Facebook’s moderators. Facebook Vice President Justin Osofsky (as cited in Gillespie, 2018) later acknowledged that such decisions are difficult because "some images may be offensive in one part of the world and acceptable in another, and even with a clear standard".

Images are not the only kind of information on the internet that needs moderation; users also encounter short and long videos, music, audio, and live broadcasts. These formats are more challenging to deal with because moderators need time and cultural knowledge to judge whether a piece of content is appropriate for the society in which it circulates. This is why Gillespie (2018) argued that "moderation shapes social media platforms … as cultural phenomena".

- The human workforce of content moderation

The volume of content on digital platforms keeps growing, which makes it increasingly hard to review all of it perfectly. This leads to a shortage of the workforce needed to check content before it is allowed to appear on a platform. Moderation "requires immense human resources", yet the people who work as moderators are often just "a handful of full-time employees" (Gillespie, 2018, p. 266). Additionally, the moderation process takes considerable time and effort: reviewing content, taking it down, and warning the users who posted it. This work therefore needs to be divided among many people, which explains why many large companies have "a group of people who provide a frontline review of specific content and incidents beneath the internal moderation team" (Gillespie, 2018, p. 266).

The cultural problem discussed above also means that platforms need a diverse pool of moderators who can handle content across cultural boundaries. This has led to the rise of community moderators, as on Reddit, who are given "tools by the platform that make possible the enforcement of group policies". However, this is only a stopgap, because community moderators are unpaid volunteers who are not always available when content needs reviewing, which may allow some illegal and harmful content to slip onto these platforms.

Attempts to implement content moderation and the controversy around them

The internet is not the only technology that has developed rapidly; a variety of other advanced technologies have too. The most important of these is arguably artificial intelligence (AI). AI has already been applied to content moderation and has proven efficient at managing content on digital platforms, because users now encounter real-time content so quickly that human moderators cannot respond in time. With the help of AI systems, content on social media can be moderated efficiently and rapidly, at a scale that human moderators cannot match.

Although AI systems have proven efficient and inexpensive for moderating content, automated moderation still has pitfalls. Sinnereich (as cited in Gillespie, 2018) argued that the policies an automated system enforces need to adapt over time, much as legal rules on liability do in society. Moreover, AI is still only a machine, so it cannot reliably "account for context, subtlety, sarcasm, and subcultural meaning" (Duarte, as cited in Gillespie, 2018). AI also relies heavily on predefined rules, which cannot anticipate every case. When a system meets a situation its rules do not cover, it cannot make a sound decision, which shows that human moderators still play an important role in moderating content on digital platforms.

Should governments play a major role in moderating social media content?

Although governments play an important role in protecting citizens’ rights offline, they should not be involved in reviewing social media content. Many people prefer social media platforms because they offer freedom of speech. The platforms do not produce content themselves; they only host it and circulate what their users post (Gillespie, 2018). If governments intervene too deeply in content moderation, they might censor content simply because they do not want users to see it. This could lead to biased moderation, and Samples (2019) notes that online bias has lately become a concern.

Personally, I think that although government intervention in content moderation may discourage some people from using these platforms, the advantages of government oversight in certain areas outweigh the disadvantages. Terrorist organizations, for example, have become adept at using social media platforms, but under pressure from Western governments their content can be taken down more readily (Gillespie, 2018). Hate speech and discrimination offer another example: "Germany and France both have laws prohibiting the promotion of Nazism, anti-Semitism, and white supremacy" (Gillespie, 2018, p. 260).

Conclusion

In conclusion, the internet and digital platforms have developed rapidly, and this has brought problems such as harmful content on these platforms. Content moderation has therefore become an important element in protecting users and providing a healthy online environment. Although almost every digital platform must moderate content, moderation still faces problems such as cultural boundaries and the demands of human labor. Recent advances in artificial intelligence (AI) have partly addressed these problems. However, AI is not a complete solution, and some moderation decisions cannot be made automatically, which means human moderators are still needed.

References:

Appen. (2021, January 23). Content moderation [Video]. YouTube. https://www.youtube.com/watch?v=zuJ7v41P0KA

Gillespie, T. (2018). Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press. https://doi.org/10.12987/9780300235029

Gillespie, T. (2018). Governance by and through platforms. In J. Burgess, A. Marwick, & T. Poell (Eds.), The SAGE handbook of social media (pp. 254–278). SAGE.

Gillespie, T. (2020). Content moderation, AI, and the question of scale. Big Data & Society, 7(2), 1–5. https://doi.org/10.1177/2053951720943234

Samples, J. (2019). Why the government should not regulate content moderation of social media. Policy Analysis, 865, 1–30. https://www.cato.org/policy-analysis/why-government-should-not-regulate-content-moderation-social-media

Examining the issues with digital platforms’ content moderation by The Hung Pham is licensed under a Creative Commons Attribution 4.0 International License.