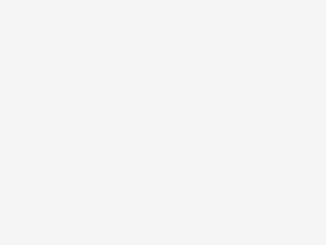

“On the Internet, nobody knows you’re a dog.” by Ben Lawson is licensed under CC BY-NC-ND 2.0


The growth of the Internet has created a need for auditing online information, not only to protect legitimate business interests but also to protect individual rights and reduce cybercrime. For digital platforms that carry a large volume of information, it is no exaggeration to say that the quality of the entire platform depends on how well that information is audited. Strict auditing of online content is necessary, but there are now cases where excessive auditing damages users’ interests and degrades the overall user experience.

The protection of minors also limits the normal use of the Internet by adults

The proportion of minors online is increasing, and many children now use electronic devices almost before they can read and write. To keep online content from harming minors who are not yet psychologically mature, digital platforms have placed various restrictions on their content. For example, some platforms block certain text outright and automatically detect gory and pornographic pictures, but they also automatically delete normal pictures that machines misidentify as indecent, which seriously affects the user experience. This includes modifying the content of online games. In fact, just as films, television shows, comics, and novels are marked with a suitable audience age, recommending an age for game players is one solution, and many online games now indicate the age they are suitable for. Since minors’ exposure to such content is difficult to avoid completely, it is better to guide and educate children correctly.

“Sekiro-Shadows-Die-Twice-070319-002” by instacodez is marked with CC PDM 1.0

Restrictions on the dissemination of artworks

Many works of art, such as paintings and sculptures, feature naked bodies, which some people consider indecent. This issue is not new; it has been controversial for centuries. In the 16th century, for example, the nude figures in The Last Judgement were forced to “put on their pants”. The mosaics placed over parts of artworks in online articles and the pixelation of famous sculptures that appear in the news are the result of inappropriate content censorship. The line between art and pornography has always been a troubling issue, as has the question of whether certain depictions of violence are intended to entertain or to harm. “These questions plague efforts to moderate questionable content, and they hinge not only on different values and ideologies but also on contested theories of psychological impact and competing politics of culture” (Gillespie, 2018, p. 10).

“Michelangelo – The Last Judgment” by Pierre Metivier is licensed under CC BY-NC 2.0

Works of special significance are deleted for containing illegal or indecent content

For example, the photograph “Terror of War”, which shows the cruelty of war, was deleted because it contained child nudity. “In many cases, there’s no clear line between an image of nudity or violence that carries global and historic significance and one that doesn’t.” “Some images may be offensive in one part of the world and acceptable in another” (Gillespie, 2018, p. 3). In such cases, we can only try to strike a balance between policy and ethics, but whether a photograph of this kind can be published should not be decided entirely by the platform’s policy rules.

Why Content Moderation Costs Social Media Companies Billions

Internet copyright disputes

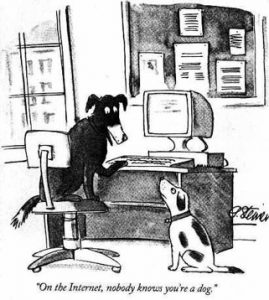

With the development of the Internet, the copyright of online works has received growing attention, and relevant laws have appeared in various countries and regions and have gradually improved, but many problems remain in reviewing specific infringements. “While automated systems may help find and quickly take down more dehumanising racist attacks, they also risk entrenching unjust rules in a rapid, global, and inscrutable fashion” (Gorwa et al., 2020, p. 11). Most online platforms now use automated review, with software that automatically captures keywords and media images, but the number of users on some active sites is so large that it exceeds the software’s capacity to analyze their uploads. “Issues of scale aside, the complex process of sorting user-uploaded material into either the acceptable or the rejected pile is far beyond the capabilities of software or algorithms alone” (Roberts, 2019, p. 34). For example, in YouTube’s review system, Content ID checks video and audio separately and compares them against its database to detect infringement, while the review as a whole must also take specific cultural and ethical aspects into account. This is a very complex operation that is still far from perfect, so similar content is sometimes judged to be plagiarized or infringing, and videos are automatically removed or held for further review, which affects the user experience.

“Copyright Football by #Banksy” by dullhunk is licensed under CC BY-NC-SA 2.0

‘It’s the worst job and no-one cares’ – BBC Stories

Harm to content reviewers

With today’s technology, automated review is still imperfect, so some content has to be reviewed by human beings who can exercise judgment. Their daily job is to watch user-uploaded content and determine whether it contains inappropriate material such as violence, pornography, or extremist speech. This work can do considerable damage to their mental health. “A Microsoft spokesperson, in a significant understatement, described content moderation work as ‘a yucky job’” (Roberts, 2019, p. 39). Moreover, some companies do not provide them with adequate technical training or mental health support. “Workers here say the companies do not provide adequate support to address the psychological consequences of the work” (Dwoskin et al., 2019). In addition, a large number of outsourced reviewers are paid low salaries for high-intensity work and are not treated fairly; how to match the treatment of this profession to its importance is also a problem that needs to be solved.

The scope of government control

The government has both the right and the obligation to restrict online content, but it should not simply ban everything that carries a potential risk, because doing so deprives users of their legitimate rights. It is more important for the government to deal with illegal content on the Internet in a timely manner than to impose too many restrictions. “Understanding the ways in which toxic technocultures develop, are sustained, and exploit platform design is imperative” (Massanari, 2017, p. 342). This applies in particular to illegal content such as online violence, pornography, online fraud, terrorist organizations and cults, the sale of prohibited items, and the provision of illegal services. “At the center of both of these policy responses is a calculation about how best to limit audience exposure to extremist narratives and maintain the marginality of extremist views, while being conscious of rights to free expression and the appropriateness of restrictions on speech” (Ganesh & Bright, 2020, p. 6). Some extremists use social media platforms to spread propaganda, recruit, and share information, and this needs to be cracked down on through cooperation between governments and platforms. It is this content that should be controlled, not the games minors play or artworks in which naked bodies end up pixelated. The online environment has clearly improved compared to what it was years ago.

“Data Security Breach” by Visual Content is licensed under CC BY 2.0

Conclusion

Online platforms should not be completely open, but neither should they impose so many restrictions that users can no longer trust them. Most platforms simply provide a service that allows users to distribute certain content, and in many cases the platforms are not legally responsible for what users upload. Transparency and freedom of expression in the censorship of online platforms may never be fully balanced, but adjusting standards in a timely manner as social circumstances change is exactly what Internet content censorship needs to do.

References

Dwoskin, E., Whalen, J., & Cabato, R. (2019). In the Philippines, content moderators at YouTube, Facebook and Twitter see the worst of the web and suffer silently. The Washington Post. Retrieved July 25, 2019, from https://www.washingtonpost.com/technology/2019/07/25/social-media-companies-are-outsourcing-their-dirty-work-philippines-generation-workers-is-paying-price/

Ganesh, B., & Bright, J. (2020). Countering Extremists on Social Media: Challenges for Strategic Communication and Content Moderation. Policy and Internet, 12(1), 6–19. https://doi.org/10.1002/poi3.236

Gillespie, T. (2018). Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press.

Gorwa, R., Binns, R., & Katzenbach, C. (2020). Algorithmic content moderation: Technical and political challenges in the automation of platform governance. Big Data & Society, 7(1). https://doi.org/10.1177/2053951719897945

Lydia, E. (2019). Facebook content moderators sue over psychological trauma. In PRI’s The World. Public Radio International, Inc. Retrieved December 12, 2019, from https://www.pri.org/stories/2019-12-12/facebook-content-moderators-sue-over-psychological-trauma

Massanari, A. (2017). Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Roberts, S. T. (2019). Behind the screen: Content moderation in the shadows of social media. Yale University Press. https://doi.org/10.12987/9780300245318

This work is licensed under a Creative Commons Attribution 4.0 International License.